Economics of Illusion: Selling the Dream

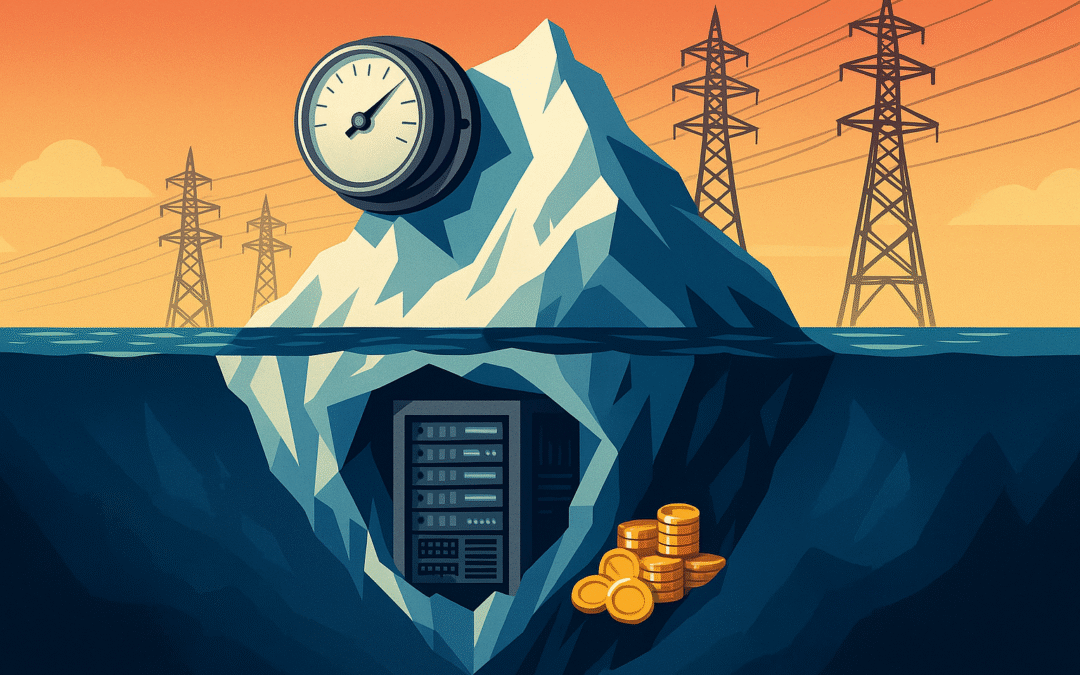

The AI reasoning revolution has created an industry-wide economic crisis in which hidden reasoning tokens and infrastructure costs are driving unsustainable pricing models. From xAI’s Grok 4, which bills reasoning tokens at $15 per million output tokens[1][2], to OpenAI’s o1-pro at $600 per million output tokens[3], reasoning models consume 5-10x more computational resources through invisible “thinking” processes. Infrastructure costs hold AI company gross margins to just 50-60%, versus 80-90% for traditional software[4][5], while exploitation of flat-rate subscriptions (where individual users consumed tens of thousands of dollars in compute for $200/month[6][7]) forced emergency pricing changes across the industry.

The core economic problem is clear: reasoning capabilities require far more energy and computation per query[8][9], while companies attempt to maintain accessible pricing through unsustainable loss-leader models[10][4]. This fundamental mismatch between capability costs and pricing models threatens the democratization of advanced AI.

Industry-wide subscription abuse forces the end of unlimited models

The unlimited subscription era is effectively dead after documented cases of users exploiting flat-rate plans to consume massive computational resources[6][7]. Anthropic’s analysis revealed that “one user consumed tens of thousands in model usage on a $200 plan”[6], while others ran Claude Code “continuously in the background, 24/7”[7]. These cases prompted weekly rate limits, starting August 28, 2025, that affect fewer than 5% of subscribers[6][11].
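Anthropic has not published how its weekly limits are enforced. As a minimal sketch, assuming a per-subscriber token budget measured over a rolling seven-day window, such a cap could look like this; the class name `WeeklyUsageCap` and the one-million-token limit are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field

WEEK_SECONDS = 7 * 24 * 3600


@dataclass
class WeeklyUsageCap:
    """Hypothetical rolling seven-day token budget for one subscriber."""

    limit_tokens: int
    window_seconds: int = WEEK_SECONDS
    # (timestamp, tokens) pairs still inside the window, oldest first.
    events: deque = field(default_factory=deque, init=False)
    used: int = field(default=0, init=False)

    def _evict(self, now: float) -> None:
        # Forget usage that has aged out of the rolling window.
        while self.events and self.events[0][0] <= now - self.window_seconds:
            _, tokens = self.events.popleft()
            self.used -= tokens

    def try_consume(self, tokens: int, now: float) -> bool:
        """Record the request and return True if it fits under the cap; otherwise reject it."""
        self._evict(now)
        if self.used + tokens > self.limit_tokens:
            return False
        self.events.append((now, tokens))
        self.used += tokens
        return True


cap = WeeklyUsageCap(limit_tokens=1_000_000)  # hypothetical budget
print(cap.try_consume(600_000, now=0.0))               # → True
print(cap.try_consume(500_000, now=3_600.0))           # → False: would exceed the weekly budget
print(cap.try_consume(500_000, now=WEEK_SECONDS + 1))  # → True: the first request has aged out
```

Unlike a per-request limit, a rolling weekly budget bounds total compute per subscriber, which targets the always-on background usage described in the paragraph above.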

Enterprise leaderboards reveal the scale of abuse. According to enterprise usage analytics, the top 1% of Claude Code users were consuming 400-800x more compute than median users[6][11]. Analysis of API usage patterns showed some accounts generating millions of reasoning tokens daily under subscription plans designed for typical user workflows[11]. These extreme users were effectively running commercial workloads through consumer subscription tiers.

OpenAI similarly restricts access to its top-tier model because running o1-pro, with its heavy reasoning-token consumption, is so expensive that unlimited access would be financially catastrophic[12][13]. ChatGPT Pro limits o1-pro to 50 messages per week[13][14] despite the $200/month fee. Meanwhile, loss-leader pricing strategies are failing[4]: some fast-growing AI startups show gross margins of only 25%[15], compared with traditional SaaS margins above 75%[5].

The result is effectively dynamic pricing, in which what users receive for their dollar is unpredictable[11]. Users cannot forecast whether they’ll receive premium model performance or be automatically throttled based on system load and usage patterns.

Reasoning tokens create exponential cost increases across all providers

Grok’s “explosive thinking tokens” potentially make it one of the most expensive reasoning models to run in practice[1][16]: output is billed at $15 per million tokens, doubling to $30 once a request’s context exceeds 128k tokens[17][18]. Analysis shows reasoning models perform dramatically more internal computation than their visible output suggests. Grok 3 can generate reasoning tokens costing 5x the visible output[16][18], while o1 can consume over 5,500 reasoning tokens on basic tasks[19].
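Because reasoning tokens are billed as output tokens even though the user never sees them, the cost of a request depends heavily on how much the model thinks. The sketch below assumes Grok 4-style tiered pricing: the $15 and $30 output rates come from the figures above, while the $3 and $6 input rates and the rule that the higher tier applies to the whole request are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TieredPrice:
    """USD per million tokens; the long_* rates apply once the prompt exceeds the threshold."""

    input_per_m: float = 3.0         # assumed
    output_per_m: float = 15.0
    long_input_per_m: float = 6.0    # assumed
    long_output_per_m: float = 30.0
    long_context_threshold: int = 128_000


def request_cost(price: TieredPrice, input_tokens: int,
                 visible_output_tokens: int, reasoning_tokens: int) -> float:
    """Dollar cost of one request, billing hidden reasoning tokens at the output rate."""
    long_context = input_tokens > price.long_context_threshold
    in_rate = price.long_input_per_m if long_context else price.input_per_m
    out_rate = price.long_output_per_m if long_context else price.output_per_m
    billed_output = visible_output_tokens + reasoning_tokens  # the user only sees the first term
    return (input_tokens * in_rate + billed_output * out_rate) / 1_000_000


grok = TieredPrice()
print(request_cost(grok, 2_000, 500, 0))        # → 0.0135 (no hidden reasoning)
print(request_cost(grok, 2_000, 500, 2_500))    # → 0.051 (reasoning at 5x the visible output)
print(request_cost(grok, 200_000, 500, 2_500))  # → 1.29 (same answer, long prompt)
```

In this sketch the hidden reasoning nearly quadruples the bill for an identical visible answer, and crossing the long-context threshold multiplies it again.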

Energy consumption reveals the computational reality. Epoch AI estimates that a typical ChatGPT query using GPT-4o consumes roughly 0.3 watt-hours[8], but reasoning models require 10-30 times more energy per query[8][20]. Reasoning models generate far more thinking tokens: internal reasoning steps that consume massive computational resources while remaining invisible to users[20].
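Applying the 10-30x multiplier to the 0.3 Wh baseline gives a rough per-query range for reasoning models. A minimal sketch; the one-million-queries-per-day volume is a hypothetical figure chosen only for scale.

```python
BASELINE_WH = 0.3  # typical GPT-4o query, per the Epoch AI estimate cited above


def reasoning_query_wh(multiplier: float, baseline_wh: float = BASELINE_WH) -> float:
    """Estimated energy per reasoning query, in watt-hours."""
    return baseline_wh * multiplier


def daily_mwh(queries_per_day: int, wh_per_query: float) -> float:
    """Daily energy in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return queries_per_day * wh_per_query / 1_000_000


QUERIES_PER_DAY = 1_000_000  # hypothetical volume
for multiplier in (10, 30):
    wh = reasoning_query_wh(multiplier)
    print(f"{multiplier}x: {wh:.1f} Wh/query, {daily_mwh(QUERIES_PER_DAY, wh):.1f} MWh/day")
```

At that hypothetical volume, reasoning queries would use roughly 3-9 MWh per day, against 0.3 MWh per day for the same number of baseline queries.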

The quadratic scaling problem intensifies costs. For an input of 100k tokens with 500 output tokens, energy use jumps to around 40 watt-hours[8]. Because attention cost grows with the square of context length, the attention computation for 1 million input tokens would be roughly 100 times as costly as for 100k tokens[8]. This makes long-context reasoning prohibitively expensive for most applications.
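The 100x figure follows from squaring the 10x length ratio, but it applies only to the attention term; much of the rest of a transformer's work grows roughly linearly with length, so the whole-request ratio lands somewhere between 10x and 100x. A toy model, with hypothetical coefficients chosen so both terms are equal at 100k tokens:

```python
def attention_cost_ratio(long_len: int, short_len: int) -> float:
    """Relative cost of full self-attention, which grows with the square of sequence length."""
    return (long_len / short_len) ** 2


def toy_total_cost(n_tokens: int, linear_coeff: float, quadratic_coeff: float) -> float:
    """Hypothetical cost model: per-token linear work plus a quadratic attention term."""
    return linear_coeff * n_tokens + quadratic_coeff * n_tokens ** 2


print(attention_cost_ratio(1_000_000, 100_000))  # → 100.0

# Coefficients are illustrative only: they make the two terms equal at 100k tokens.
short_cost = toy_total_cost(100_000, linear_coeff=1.0, quadratic_coeff=1e-5)
long_cost = toy_total_cost(1_000_000, linear_coeff=1.0, quadratic_coeff=1e-5)
print(long_cost / short_cost)  # → 55.0
```

The more a workload is dominated by attention over long contexts, the closer its cost ratio moves toward the full 100x.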

Enterprise transparency issues compound the problem. Without clear visibility into reasoning token consumption, only 23% of enterprises can accurately predict monthly AI spend[21][22]. The unpredictability of variable costs creates major friction for budget planning and ROI calculations[22][5].
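A simple way to see why forecasting is hard: if hidden reasoning tokens are some unknown multiple of the visible output, the monthly bill spans a wide range even when request volume is known exactly. A minimal sketch; the request volume, token counts, 0-5x multiplier range, and $15 output price are illustrative assumptions.

```python
def monthly_output_spend(requests: int, visible_tokens: int,
                         reasoning_multiplier: float, price_per_m: float) -> float:
    """Output-token spend in USD when reasoning adds reasoning_multiplier x the visible tokens."""
    billed_tokens = requests * visible_tokens * (1 + reasoning_multiplier)
    return billed_tokens * price_per_m / 1_000_000


def spend_range(requests: int, visible_tokens: int, low_mult: float,
                high_mult: float, price_per_m: float) -> tuple[float, float]:
    """Lower and upper bounds on monthly spend for a range of reasoning multipliers."""
    return (monthly_output_spend(requests, visible_tokens, low_mult, price_per_m),
            monthly_output_spend(requests, visible_tokens, high_mult, price_per_m))


# Illustrative inputs: 100k requests/month, 500 visible tokens each, $15 per million.
low, high = spend_range(100_000, 500, low_mult=0.0, high_mult=5.0, price_per_m=15.0)
print(f"${low:,.0f} to ${high:,.0f}")  # → $750 to $4,500
```

The sixfold spread comes entirely from the unknown reasoning multiplier, not from any uncertainty in usage volume.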

Infrastructure costs drive consumer subsidies and grid instability

Regular consumers now subsidize AI data center costs through rising electricity bills across the United States[23][24]. Americans are footing the bill with electricity prices rising 6.5% between May 2024 and May 2025, while some states report increases of 18.4% to 36.3%[24]. Utility companies fund infrastructure projects by raising costs for their entire client base[23], creating involuntary subsidies for AI development.

The scale of energy demand is staggering. The International Energy Agency estimates that U.S. data center energy demand will increase by 130% by 2030[9]. Former Google CEO Eric Schmidt testified that data centers will require an additional 29 gigawatts by 2027 and 67 more gigawatts by 2030[9]. AI data centers consume 35% of Virginia’s electricity[23] while serving global users, creating fundamental geographic cost imbalances.
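To put the gigawatt figures in energy terms, a continuous power draw in gigawatts converts to terawatt-hours per year by multiplying by the 8,760 hours in a year. A minimal sketch; running at full load all year is an upper-bound assumption, and the 29 GW and 67 GW figures are treated separately rather than summed.

```python
HOURS_PER_YEAR = 8_760


def annual_twh(gigawatts: float, utilization: float = 1.0) -> float:
    """Annual energy in TWh for a capacity in GW; utilization=1.0 is an upper-bound assumption."""
    return gigawatts * HOURS_PER_YEAR * utilization / 1_000  # GWh -> TWh


print(round(annual_twh(29), 2))  # → 254.04
print(round(annual_twh(67), 2))  # → 586.92
```

Lower average utilization scales these figures down proportionally; at 50%, for example, the 67 GW figure corresponds to about 293 TWh per year.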

NVIDIA’s battery-equipped racks attempt grid stabilization but reveal the problem’s magnitude[25][26]. The new GB300 NVL72 systems include energy storage that can “smooth power spikes and reduce peak grid demand by up to 30%”[25][26], but AI data centers can swing from 20 MW at idle to 180 MW at full burst in milliseconds[27]. This creates grid instability that affects all consumers while retail electricity prices rise at a 9% annual rate—four times faster than overall consumer prices[28].
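The idea behind rack-level storage is peak shaving: a battery supplies the part of a burst above some grid cap and recharges during idle periods, so the grid sees a flatter load. The sketch is a generic illustration, not NVIDIA's GB300 design; the one-second steps, 0.5 MWh battery, 60 MW charge/discharge rating, and 126 MW cap (30% below the 180 MW burst) are hypothetical.

```python
def peak_shave(load_mw: list[float], grid_cap_mw: float, battery_mwh: float,
               max_rate_mw: float, step_hours: float) -> list[float]:
    """Return grid draw per time step when a battery covers load above grid_cap_mw."""
    stored = battery_mwh  # assumption: the battery starts fully charged
    grid = []
    for load in load_mw:
        if load > grid_cap_mw:
            # Discharge to cover the excess, limited by power rating and remaining energy.
            discharge = min(load - grid_cap_mw, max_rate_mw, stored / step_hours)
            stored -= discharge * step_hours
            grid.append(load - discharge)
        else:
            # Recharge from spare headroom under the cap, limited by rating and free capacity.
            charge = min(grid_cap_mw - load, max_rate_mw, (battery_mwh - stored) / step_hours)
            stored += charge * step_hours
            grid.append(load + charge)
    return grid


# Hypothetical idle/burst pattern in MW, one-second steps.
load = [20.0, 180.0, 180.0, 180.0, 20.0, 20.0, 180.0, 180.0]
grid = peak_shave(load, grid_cap_mw=126.0, battery_mwh=0.5, max_rate_mw=60.0, step_hours=1 / 3600)
print(grid)                                 # → [20.0, 126.0, 126.0, 126.0, 80.0, 80.0, 126.0, 126.0]
print(round(1 - max(grid) / max(load), 2))  # → 0.3
```

The sketch also shows the trade-off: idle draw rises from 20 MW to 80 MW while the battery recharges, so storage flattens the load profile rather than eliminating demand.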

The subsidy burden is accelerating. Electricity prices could jump 15-40% in just five years[29], with prices potentially doubling by 2050[29]. The cost of adding capacity to power data centers is passed on to ordinary customers[28] who have no connection to AI services, creating a systematic wealth transfer from consumers to AI companies.
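Converting the cumulative projections into annual rates makes them comparable with the 9% annual figure in the previous paragraph. A minimal sketch of compound-growth arithmetic; treating 2025 as the starting year for the 2050 doubling is an assumption.

```python
def implied_annual_rate(total_growth: float, years: float) -> float:
    """Annual rate r such that (1 + r) ** years == 1 + total_growth."""
    return (1 + total_growth) ** (1 / years) - 1


def cumulative_growth(annual_rate: float, years: float) -> float:
    """Total growth after compounding annual_rate for the given number of years."""
    return (1 + annual_rate) ** years - 1


print(f"{implied_annual_rate(0.15, 5):.1%}")   # → 2.8%  (15% over five years)
print(f"{implied_annual_rate(0.40, 5):.1%}")   # → 7.0%  (40% over five years)
print(f"{implied_annual_rate(1.00, 25):.1%}")  # → 2.8%  (doubling, assumed 2025 to 2050)
print(f"{cumulative_growth(0.09, 5):.1%}")     # → 53.9% (9% a year for five years)
```

By this arithmetic, the 15-40% five-year projection corresponds to roughly 3-7% a year, so sustaining a 9% annual rate would put five-year growth above that range.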

Enterprise decision makers face impossible cost-benefit calculations

AI companies achieve gross margins of just 50-60%, compared with 80-90% for traditional SaaS[4][5], largely because of heavy infrastructure requirements[5], which makes sustainable pricing models nearly impossible. Some of the fast-growing “AI Supernovas” tracked by Bessemer have gross margins of only 25%[15], and AI coding startups can have “very negative” gross margins[30].
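Gross margin is revenue minus cost of revenue (for AI companies, largely inference compute), divided by revenue. The sketch uses hypothetical per-user costs to show how a flat $200 plan moves from healthy to negative margins, and how a single heavy user can pull a whole cohort underwater.

```python
def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost_of_revenue) / revenue


def cohort_margin(price: float, costs_per_user: list[float]) -> float:
    """Blended gross margin for users who all pay the same flat price."""
    revenue = price * len(costs_per_user)
    return gross_margin(revenue, sum(costs_per_user))


# Hypothetical monthly inference costs per user on a $200 plan.
print(gross_margin(200, 80))      # → 0.6, within the typical AI range
print(gross_margin(200, 150))     # → 0.25, like the weakest margins cited above
print(gross_margin(200, 20_000))  # → -99.0, a "tens of thousands" power user

# Hypothetical cohort: 99 typical users and one power user.
print(cohort_margin(200, [80] * 99 + [20_000]))  # → -0.396
```

One heavy user out of a hundred is enough to turn a 60% margin cohort negative, which is why flat-rate plans are so exposed to outliers.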

Published output-token prices show how widely costs vary across frontier and reasoning models:

- Grok 4: $15 per million output tokens (doubles after 128k)[17][18]

- GPT-4.5: $150 per million output tokens[31][32][33]

- Claude Opus 4.1: $75 per million output tokens[34][35]

- o1-pro: $600 per million output tokens[3]
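To make the list concrete, this sketch prices the same hypothetical workload (3 million output tokens, including any reasoning tokens) at each listed rate. Input-token charges are ignored, and Grok 4 is assumed to stay under its 128k long-context threshold.

```python
# USD per million output tokens, from the list above.
# Input tokens are ignored; Grok 4 is assumed to stay under 128k context.
OUTPUT_PRICE_PER_M = {
    "Grok 4": 15.0,
    "Claude Opus 4.1": 75.0,
    "GPT-4.5": 150.0,
    "o1-pro": 600.0,
}


def workload_costs(output_tokens: int) -> dict[str, float]:
    """Output-token cost of the same workload under each model's published rate."""
    return {model: output_tokens * price / 1_000_000
            for model, price in OUTPUT_PRICE_PER_M.items()}


costs = workload_costs(3_000_000)  # hypothetical workload size
for model, cost in costs.items():
    print(f"{model:>16}: ${cost:,.0f}")
print(max(costs.values()) / min(costs.values()))  # → 40.0
```

The 40x spread between the cheapest and most expensive rate exists before accounting for differences in how many reasoning tokens each model generates for the same task.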

The venture capital reality check is harsh. Analysis shows roughly half of every VC dollar in 2025 will be invested in AI companies[36], yet 67% of AI startups report infrastructure costs as their primary growth constraint[37]. The traditional strategy of attracting customers with below-cost pricing is failing as reasoning capabilities make the economics fundamentally unsustainable[4][15].

Enterprise decision frameworks now require complex cost-benefit analysis for each model choice, with organizations facing 6x+ cost premiums and unpredictable token consumption that makes budgeting impossible[3][5]. Many enterprises stick to older models for production applications despite inferior reasoning capabilities because the costs are predictable and manageable.

DeepSeek’s efficiency approach suggests alternatives exist

While the industry focuses on unprecedented scale and ever more expensive model training[9], DeepSeek’s architectural innovations challenge the assumption that “bigger is better”[38][39]. DeepSeek’s V3 model is priced at $0.27 per million input tokens and $1.10 per million output tokens[9], dramatically lower than competitors. GPT-4.5, by comparison, charges $75 per million input tokens and $150 per million output tokens[33], more than 130 times DeepSeek V3’s output price.

The efficiency gains translate to energy savings. DeepSeek’s mixture-of-experts (MoE) architecture activates only 37 billion of its 671 billion parameters for each token[9]. This architectural efficiency enables 50% price reductions during off-peak times[9] and demonstrates that dramatic cost reductions are possible through optimization rather than raw scaling.
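The mechanism behind that saving is sparse routing: a small router scores every expert for each token, and only the top-k experts actually run. The following is a generic softmax top-k sketch in plain Python; the eight toy experts, k = 2, and the router weights are hypothetical and far smaller than DeepSeek's, and DeepSeek's production gating is not reproduced here.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, router_weights, experts, k):
    """Route one token vector to its top-k experts and return (mixed_output, experts_used)."""
    # One router logit per expert: dot product of the token with that expert's router vector.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # renormalize over the chosen experts only
    out = [0.0] * len(token)
    for gate, i in zip(gates, top):
        y = experts[i](token)  # only these k experts are evaluated; the rest are skipped
        out = [o + gate * v for o, v in zip(out, y)]
    return out, top


# Eight hypothetical toy "experts", each scaling its input by a different factor.
experts = [lambda v, s=s: [s * x for x in v] for s in range(1, 9)]
router_weights = [[float(i), 0.0] for i in range(8)]  # hypothetical router parameters

output, used = moe_forward([1.0, 0.0], router_weights, experts, k=2)
print(used)                                                              # → [7, 6]
print(f"{2 / 8:.0%} of experts active in this toy")                      # → 25% ...
print(f"{37 / 671:.1%} of DeepSeek-V3's parameters active per token")   # → 5.5% ...
```

Compute per token scales with the experts that run, not with the total parameter count, which is why a 671-billion-parameter model can cost roughly as much per token as a much smaller dense one.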

However, the true training costs remain disputed. While DeepSeek claims $5.6 million in training costs[40][41], SemiAnalysis research suggests actual costs closer to $1.3 billion[41] when including research, hardware, and infrastructure expenses. The claimed efficiency excludes hundreds of millions in hardware investments[40] that enable the architectural optimizations.
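The headline number is compute-only arithmetic: GPU-hours multiplied by an assumed rental price. The figures here are those reported in the DeepSeek-V3 technical report (about 2.788 million H800 GPU-hours at $2 per GPU-hour); everything outside that product, such as purchased hardware, earlier research and ablation runs, and staff, is excluded by construction.

```python
# Reported H800 GPU-hours by training stage (DeepSeek-V3 technical report).
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
RENTAL_USD_PER_GPU_HOUR = 2.0  # the report's assumed rental price


def compute_only_cost(gpu_hours: dict[str, int], usd_per_hour: float) -> float:
    """Training cost counting only rented GPU time; hardware capex and research are excluded."""
    return sum(gpu_hours.values()) * usd_per_hour


headline = compute_only_cost(GPU_HOURS, RENTAL_USD_PER_GPU_HOUR)
print(f"${headline:,.0f}")  # → $5,576,000
print(f"{1.3e9 / headline:.0f}x below the $1.3 billion estimate")  # → 233x ...
```

The two numbers answer different questions: one prices a single final training run, the other estimates what it cost to be able to perform that run.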

DeepSeek’s innovations demonstrate alternatives to exponential cost scaling[39]. Their focus on architectural efficiency under semiconductor constraints forced innovations that Western companies haven’t pursued[42][39], suggesting different paths forward exist beyond the current unsustainable economics.

Conclusion

The AI reasoning revolution faces a fundamental economic crisis in which computational demands exceed what current pricing models can sustain. Hidden reasoning tokens, infrastructure costs, and energy consumption drive steep expense growth, while AI company gross margins sit at just 25-60%[4][5][15], far below traditional software economics.

The unlimited subscription model is dead after users exploited flat-rate plans to consume tens of thousands of dollars in compute for $200/month[6][7]. Regular consumers now subsidize AI development through electricity bills that rose 6.5% nationally and up to 36.3% in some states[24], while U.S. data center energy demand is projected to increase 130% by 2030[9]. NVIDIA’s grid stabilization efforts reveal the infrastructure strain[25][26], but consumers bear the cost burden.

Enterprise adoption faces impossible economics, with o1-pro costing $600 per million output tokens[3] and reasoning models consuming 10-30x more energy[8][20] than traditional models. Only 23% of enterprises can accurately predict AI spending[21][22], while 67% of AI startups cite infrastructure costs as their primary constraint[37].

DeepSeek’s alternative approach[38][39][9] demonstrates that architectural efficiency can reduce costs dramatically, but the industry remains focused on exponential scaling that drives unsustainable economics. Without a fundamental shift from raw computational scaling toward efficiency, the reasoning revolution risks pricing itself out of practical adoption while forcing ordinary consumers to subsidize development through higher energy costs.

The hidden costs represent a systemic crisis where capability promises disconnect from economic reality, infrastructure demands threaten grid stability, and pricing models fundamentally cannot support the computational requirements. The era of accessible AI reasoning is ending, replaced by complex usage-based models that make advanced AI capabilities a luxury accessible only to the most well-funded organizations.

Sources

[1] How Much Will Grok 4 Cost and What Should Developers … – Apidog https://apidog.com/blog/grok-4-pricing/

[2] Elon Musk’s AI company, xAI, launches an API for Grok 3 | TechCrunch https://techcrunch.com/2025/04/09/elon-musks-ai-company-xai-launches-an-api-for-grok-3/

[3] OpenAI o1-Pro API: Everything Developers Need to Know – Helicone https://www.helicone.ai/blog/o1-pro-for-developers

[4] AI Investment and Market Outlook in 2025 – LinkedIn https://www.linkedin.com/pulse/ai-investment-market-outlook-2025-eric-janvier-0z8be

[5] Financial KPIs for AI Startups to Measure & Improve – Burkland https://burklandassociates.com/2025/06/17/financial-kpis-for-ai-startups-to-measure-improve/

[6] Anthropic Introduces New Rate Limits for Paid Subscribers to Stop … https://sparklextechnologies.com/anthropic-introduces-new-rate-limits-for-paid-subscribers-to-stop-claude-code-usage-abuse/

[7] Anthropic unveils new rate limits to curb Claude Code power users https://techcrunch.com/2025/07/28/anthropic-unveils-new-rate-limits-to-curb-claude-code-power-users/

[8] How much energy does ChatGPT use? – Epoch AI https://epoch.ai/gradient-updates/how-much-energy-does-chatgpt-use

[9] Why AI demand for energy will continue to increase | Brookings https://www.brookings.edu/articles/why-ai-demand-for-energy-will-continue-to-increase/

[10] Loss Leader Pricing Strategy in AI Monetization – LinkedIn https://www.linkedin.com/pulse/loss-leader-pricing-strategy-ai-monetization-anbu-muppidathi-6jc3e

[11] Claude Code: Rate limits, pricing, and alternatives | Blog – Northflank https://northflank.com/blog/claude-rate-limits-claude-code-pricing-cost

[12] OpenAI Is A Systemic Risk To The Tech Industry https://www.wheresyoured.at/openai-is-a-systemic-risk-to-the-tech-industry-2/

[13] Is ChatGPT Pro Worth The $200 Per Month? – KDnuggets https://www.kdnuggets.com/chatgpt-pro-worth-200-month

[14] OpenAI is charging $200 a month for an exclusive version of its o1 … https://www.theverge.com/2024/12/5/24314147/openai-reasoning-model-o1-strawberry-chatgpt-pro-new-tier

[15] The State of AI 2025 – Bessemer Venture Partners https://www.bvp.com/atlas/the-state-of-ai-2025

[16] Grok 3 – Intelligence, Performance & Price Analysis https://artificialanalysis.ai/models/grok-3

[17] Grok 4 Initial Impressions: Is xAI’s New LLM the Most Intelligent AI … https://forgecode.dev/blog/grok-4-initial-impression/

[18] x.ai – Intelligence, Performance & Price Analysis https://artificialanalysis.ai/providers/xai

[19] O1 models hidden reasoning tokens : r/OpenAI – Reddit https://www.reddit.com/r/OpenAI/comments/1hrhdbp/o1_models_hidden_reasoning_tokens/

[20] How Much Energy Does AI Use? The People Who Know Aren’t Saying https://www.wired.com/story/ai-carbon-emissions-energy-unknown-mystery-research/

[21] AI Pricing: What’s the True AI Cost for Businesses in 2025? – Zylo https://zylo.com/blog/ai-cost/

[22] How transparency and outcome pricing are democratizing … https://xpert.digital/en/the-end-of-hidden-ai-costs/

[23] How Your Utility Bills Are Subsidizing Power-Hungry AI https://techpolicy.press/how-your-utility-bills-are-subsidizing-power-hungry-ai

[24] AI’s soaring energy consumption is causing skyrocketing power bills … https://www.tomshardware.com/tech-industry/ai-data-centers-soaring-energy-consumption-is-causing-skyrocketing-power-bills-for-households-across-the-us-states-reporting-spikes-in-energy-costs-of-up-to-36-percent

[25] Nvidia addresses AI peak power demand, spikes in new rack-scale … https://www.utilitydive.com/news/nvidia-rack-scale-system-smooth-ai-power/756279/

[26] How New GB300 NVL72 Features Provide Steady Power for AI https://developer.nvidia.com/blog/how-new-gb300-nvl72-features-provide-steady-power-for-ai/

[27] AI Data Center Power Smoothing – Why Is GrapheneGPU Different? https://www.skeletontech.com/skeleton-blog/ai-data-center-power-smoothing-why-is-graphenegpu-different?hsLang=en

[28] AI Is Power-Hungry – Paul Krugman – Substack https://paulkrugman.substack.com/p/ai-is-power-hungry

[29] Consumers shouldn’t subsidize the energy needs of data centers https://thedailyrecord.com/2025/06/20/ai-data-centers-raise-electricity-prices/

[30] AI Coding Startups Face Unexpected Financial Headwinds – Kukarella https://www.kukarella.com/news/ai-coding-startups-face-unexpected-financial-headwinds

[31] Why Is OpenAI’s Most Expensive Model Not Worth Its Premium Price? https://blog.laozhang.ai/ai-models/gpt-4-5-why-openai-most-expensive-model-not-worth-premium-price/

[32] Is GPT-4.5 API Price Too Expensive? A Quick Look – Apidog https://apidog.com/blog/gpt-4-5-api-price/

[33] r/OpenAI on Reddit: GPT-4.5 has an API price of $75/1M input and … https://www.reddit.com/r/OpenAI/comments/1izpgct/gpt45_has_an_api_price_of_751m_input_and_1501m/

[34] Claude Opus 4.1 – Anthropic https://www.anthropic.com/news/claude-opus-4-1

[35] Introducing Claude 4 – Anthropic https://www.anthropic.com/news/claude-4

[36] AI is hungry: What’s on the menu? | Wellington US Institutional https://www.wellington.com/en-us/institutional/insights/ai-is-hungry-whats-on-the-menu

[37] This Is What AI Commitment Looks Like: $392 Billion and Rising https://www.wisdomtree.com/investments/blog/2025/05/21/this-is-what-ai-commitment-looks-like-392-billion-and-rising

[38] Training AI for Pennies on the Dollar: Are DeepSeek’s Costs Being … https://www.sify.com/ai-analytics/training-ai-for-pennies-on-the-dollar-are-deepseeks-costs-being-undersold/

[39] DeepSeek’s AI Innovation: A Shift in AI Model Efficiency and Cost … https://blogs.idc.com/2025/01/31/deepseeks-ai-innovation-a-shift-in-ai-model-efficiency-and-cost-structure/

[40] [D] DeepSeek’s $5.6M Training Cost: A Misleading Benchmark for AI … https://www.reddit.com/r/MachineLearning/comments/1ibzsxa/d_deepseeks_56m_training_cost_a_misleading/

[41] “Deepseek’s AI training only cost $6 million!!” Ah, no. More like $1.3 … https://www.gregorybufithis.com/2025/02/07/deepseeks-ai-training-only-cost-6-million-ah-no-more-like-1-3-billion/

[42] WashU Expert: How DeepSeek changes the AI industry – The Source https://source.washu.edu/2025/02/washu-expert-how-deepseek-changes-the-ai-industry/

[43] LLM Pricing: Top 15+ Providers Compared in 2025 https://research.aimultiple.com/llm-pricing/

[44] Billing for Generative AI Companies: Unique Challenges and … https://www.goodsign.com/blog/invoicing-for-generative-ai-companies-unique-challenges-and-strategies

[45] Updating rate limits for Claude subscription customers – Reddit https://www.reddit.com/r/ClaudeAI/comments/1mbo1sb/updating_rate_limits_for_claude_subscription/

[46] AI Model Leaderboard: Top Models Compared 2025 – BytePlus https://www.byteplus.com/en/topic/420399

[47] LLM Leaderboard 2025 – Vellum AI https://www.vellum.ai/llm-leaderboard

[48] Watch Out for the Pitfalls of AI-Driven Bill Review – Paradigm https://www.paradigmcorp.com/insights/watch-out-for-the-pitfalls-of-ai-driven-bill-review/

[49] Anthropic Claude API: A Practical Guide – Acorn Labs https://www.acorn.io/resources/learning-center/claude-api/

[50] What Is AI Transparency? – Artificial Intelligence – Salesforce https://www.salesforce.com/artificial-intelligence/ai-transparency/

[51] Avoiding Costly Payment Errors: How AI Drives Data Transparency https://optimus.tech/blog/avoiding-costly-payment-errors-ai-driven-solutions-for-data-transparency

[52] How AI Is Reducing Medical Billing Errors and Improving Accuracy https://blog.nym.health/how-ai-is-reducing-medical-billing-errors-and-improving-accuracy

[53] Medical Billing Automation: How AI Reduces Errors and Increases … https://www.enter.health/post/medical-billing-automation-ai-error-reduction

[54] AI for Billing and Refund Automation – EverWorker https://everworker.ai/blog/ai-for-billing-and-refund-automation
