Part I: The Architecture of Concentration

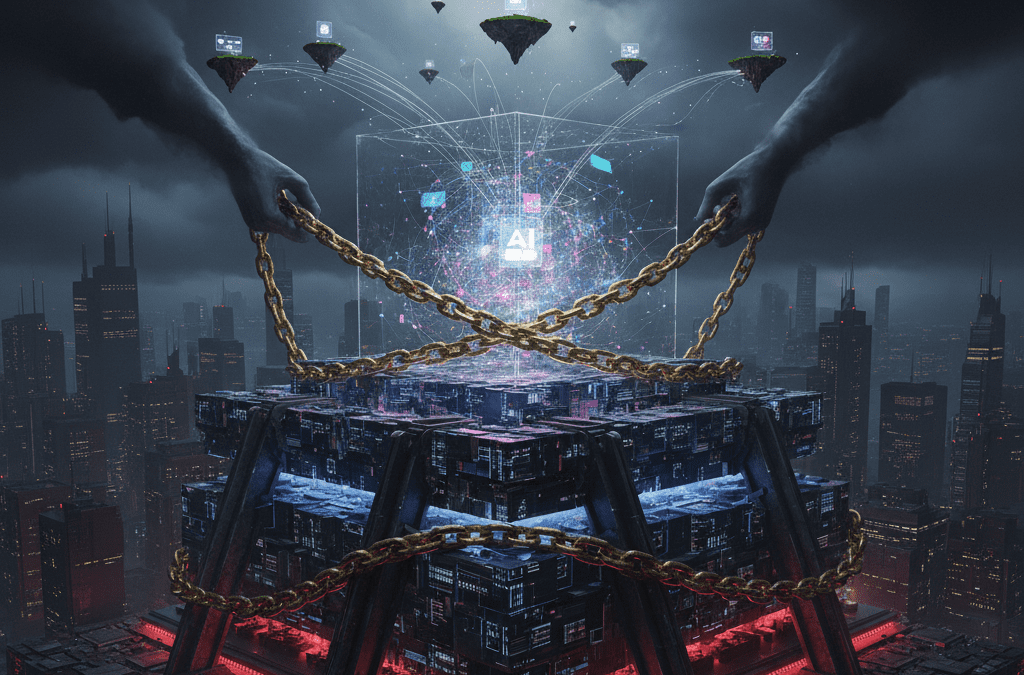

The advent of generative artificial intelligence (AI) represents a technological shift of historic proportions, promising to reshape economies and societies. Yet, beneath the surface of consumer-facing applications like chatbots and image generators lies a complex industrial architecture—the AI stack—that is rapidly consolidating. Unlike previous technological waves, where market power often accumulated over years or decades, the generative AI ecosystem exhibits a powerful, innate pull towards concentration. This tendency is not a market failure but a consequence of its fundamental design, which is characterized by staggering barriers to entry and critical chokepoints at its foundational layers. An analysis of this structure reveals that the AI industry is not a level playing field but a vertically integrated system where control over essential inputs grants a handful of incumbent firms disproportionate power over the entire value chain. This reality demands a new paradigm for competition policy: one that is proactive, structurally aware, and focused on preempting the monopolization of the 21st century’s most critical technology before it becomes an irreversible fact.

Section 1: The New Industrial Stack and Its Chokepoints

To comprehend the dynamics of competition in the AI era, it is essential to move beyond a view of the market as a horizontal collection of competing applications. The AI economy is more accurately understood as a vertically integrated technology stack, a layered hierarchy of interdependent components where power and value are unevenly distributed. Each layer provides a critical input for the layer above it, creating a chain of dependencies that flows from the physical silicon at the base to the user-facing services at the top. It is within this structure, particularly at the foundational layers, that market power is accumulating at an alarming rate, creating chokepoints that threaten to stifle competition across the entire ecosystem.1

Defining the Four-Layer AI Stack

The generative AI value chain can be deconstructed into four distinct but deeply interconnected layers:

- Layer 1: Semiconductors (AI Accelerators): This is the physical foundation of the AI economy. It consists of highly specialized processors, primarily Graphics Processing Units (GPUs) and, increasingly, custom chips such as Google’s TPUs and Amazon’s Trainium, that are optimized for the parallel computations required to train and run large AI models. Although GPUs remain programmable processors, data-center accelerators are purpose-built for AI workloads, which makes them a practically indispensable input, with few ready substitutes, for any serious AI development effort.1

- Layer 2: Cloud Infrastructure: This layer aggregates the specialized hardware from Layer 1 into massive, hyperscale data centers. Cloud service providers offer access to vast clusters of AI accelerators, along with the necessary networking, storage, power, and cooling infrastructure, as a utility service. For all but a handful of the world’s largest technology companies, renting access to this infrastructure is the only feasible way to acquire the computational power needed to train a foundation model.1

- Layer 3: Foundation Models (FMs): These are the massive, pre-trained AI models, such as OpenAI’s GPT series, Anthropic’s Claude models, and Meta’s Llama family, that form the core intelligence of modern AI. Trained on vast datasets at enormous expense, these FMs function as a kind of “operating system” for AI, providing a general-purpose base of knowledge and capabilities that can be adapted (or “fine-tuned”) for a wide range of specific tasks.4

- Layer 4: Applications and Agents: This is the most visible layer, comprising the end-user products and services built atop foundation models. This includes well-known applications like ChatGPT, AI-powered features within existing software (e.g., Microsoft 365 Copilot), and a rapidly growing ecosystem of specialized tools for tasks like coding, marketing, and customer service.5

Concentration at the Foundational Layers

While the application layer may appear vibrant and competitive, a closer examination reveals that competition erodes dramatically as one descends the stack. The most foundational layers, which serve as critical inputs for all subsequent innovation, are characterized by extreme market concentration.

The hardware layer is, for all practical purposes, a monopoly. NVIDIA, through a combination of superior hardware design and a deeply entrenched software ecosystem, has established a commanding position. The company controls an estimated 80% of the market for AI accelerator chips and a staggering 94% of the discrete GPU market.7 This dominance is not merely a function of its hardware; it is cemented by its proprietary CUDA (Compute Unified Device Architecture) software platform. CUDA provides a programming model and a rich set of libraries that make it significantly easier for developers to build and optimize AI models on NVIDIA’s GPUs. Over more than a decade, this software has created a powerful lock-in effect, making the vast ecosystem of AI tools, research, and talent dependent on NVIDIA’s platform. This makes switching to a competitor’s hardware a costly and complex undertaking, effectively turning NVIDIA’s products into the de facto standard for the entire industry.

The cloud infrastructure layer, while not a pure monopoly, is a tight oligopoly controlled by three dominant players. Amazon Web Services (AWS), Microsoft Azure, and Google Cloud collectively command over 60% of the global cloud infrastructure market. AWS, the market leader, holds a 30% share on its own, followed by Azure at 20% and Google Cloud at 13%.3 This concentration gives these “hyperscalers” immense gatekeeper power. They are the primary landlords of the digital infrastructure required for AI development, controlling access to the massive GPU clusters that are the lifeblood of foundation model training. Their market position allows them to set the terms of access and pricing for these essential resources, influencing the competitive landscape for every company operating at the higher layers of the stack.1
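These concentration figures can be summarized with two standard antitrust measures: the three-firm concentration ratio (CR3) and the Herfindahl-Hirschman Index (HHI, the sum of squared percentage shares). The sketch below is illustrative only. It uses the three shares cited above and, because the text does not break down the remaining 37% of the market, it reports bounds rather than a single HHI. The upper bound rests on an assumption: that no other provider is larger than Google Cloud’s 13%.

```python
# Cloud infrastructure shares (%) cited in the text for the three largest providers.
SHARES = {"AWS": 30, "Microsoft Azure": 20, "Google Cloud": 13}


def concentration_ratio(shares: dict[str, int], k: int = 3) -> int:
    """Combined share of the k largest firms (CR-k)."""
    return sum(sorted(shares.values(), reverse=True)[:k])


def hhi_bounds(shares: dict[str, int]) -> tuple[int, int]:
    """Bounds on the HHI when only the largest firms' shares are known.

    Lower bound: the residual share is spread across many tiny firms,
    which contribute (almost) nothing to the sum of squares.
    Upper bound: the residual is packed into as few firms as possible,
    none larger than the smallest listed firm (assumption: the listed
    firms are the market leaders). Greedy packing maximizes the sum of
    squares because squaring is convex.
    """
    listed = sum(s * s for s in shares.values())
    residual = 100 - sum(shares.values())
    cap = min(shares.values())
    full_firms, remainder = divmod(residual, cap)
    upper = listed + full_firms * cap * cap + remainder * remainder
    return listed, upper


print(concentration_ratio(SHARES))  # 63
print(hhi_bounds(SHARES))           # (1469, 1928)
```

For reference, the 2023 US Merger Guidelines treat a market with an HHI above 1,800 as highly concentrated. Depending on how the residual 37% is distributed, the cited shares are consistent with values on either side of that line, so any formal assessment would need the full share distribution.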

Vertical Integration and Inter-Layer Dependencies

The concentration of power at the hardware and cloud layers is not a static phenomenon; it is being actively leveraged to exert influence and control over the more nascent foundation model and application layers. The AI stack is increasingly characterized by vertical integration, where firms dominant at one layer use their market power to gain an advantage in another. This creates a cascade of dependencies where the structure of the lower layers dictates the competitive possibilities of the upper layers.1

This dynamic extends beyond commercial arrangements into the very architecture of the data center. The next generation of AI infrastructure is being built around new technologies like Compute Express Link (CXL) for memory pooling and photonic interconnects for high-speed data transfer. These architectural choices create a form of “infrastructural path dependency.” Once a hyperscaler commits to a specific hardware architecture, every component, from ASICs to memory modules, must be compatible with it. This creates lock-in not through software licensing, but through the unyielding realities of physics, thermals, and bandwidth ceilings. Switching away from an architecture once it has been standardized across a data center becomes, as one analysis notes, “architecturally impossible without a full rebuild”.11

This physical and architectural lock-in transforms the AI stack from a mere technical diagram into a political and economic map of control points. The extreme concentration in hardware and cloud infrastructure creates a powerful dependency effect that flows upward. The viability of a new application (Layer 4) is contingent on its access to a foundation model (Layer 3). The development of that foundation model is, in turn, critically dependent on access to massive-scale compute from a cloud provider (Layer 2). And that cloud provider’s ability to offer competitive AI infrastructure is dependent on its access to a steady supply of accelerators from the dominant chipmaker (Layer 1). This chain of dependency means that a monopoly or oligopoly at a lower layer can be used to project power, foreclose competition, and extract rents from all layers above it. Consequently, any antitrust analysis that focuses on a single market in isolation—for example, the “market for chatbots”—is fundamentally incomplete. A holistic, “full-stack” analysis is required to understand how power is being consolidated and leveraged across the entire value chain, a reality that necessitates a more sophisticated and preemptive approach to competition policy.
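The upward flow of dependency described above can be made concrete with a small dependency map. The following sketch is a deliberate simplification: each layer has exactly one input layer, and the layer identifiers are hypothetical labels. It returns every layer whose inputs pass, directly or indirectly, through a given chokepoint.

```python
# Simplified model of the four-layer AI stack: each layer maps to the
# layer whose output it consumes (None marks the base of the stack).
STACK_DEPENDS_ON = {
    "applications": "foundation_models",  # Layer 4 builds on Layer 3
    "foundation_models": "cloud",         # Layer 3 trains on Layer 2
    "cloud": "accelerators",              # Layer 2 aggregates Layer 1
    "accelerators": None,                 # Layer 1 is the base
}


def exposed_layers(chokepoint: str) -> list[str]:
    """Layers whose supply chain runs, directly or indirectly, through `chokepoint`."""
    exposed = []
    for layer, dependency in STACK_DEPENDS_ON.items():
        # Walk down the stack from this layer until the base is reached.
        while dependency is not None:
            if dependency == chokepoint:
                exposed.append(layer)
                break
            dependency = STACK_DEPENDS_ON[dependency]
    return exposed


print(exposed_layers("accelerators"))  # ['applications', 'foundation_models', 'cloud']
print(exposed_layers("cloud"))         # ['applications', 'foundation_models']
```

A chokepoint at the base exposes every layer above it, while one at the application layer exposes none. That asymmetry is what a single-market analysis misses and a full-stack analysis captures.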

Section 2: The Insurmountable Barriers: Quantifying the Cost of Entry

The strong tendency toward market concentration in the AI industry is not solely the result of anticompetitive conduct; it is deeply rooted in the economic realities of developing cutting-edge foundation models. The barriers to entry are not merely high; they are, for most independent actors, insurmountable. These barriers form an “iron triangle” of capital, compute, and data, which are not only formidable on their own but are also mutually reinforcing. Together, they create a deep and wide competitive moat that protects the handful of firms at the frontier, making it nearly impossible for new, unaligned entrants to challenge their position.

The Capital Barrier: From Millions to Billions

The most straightforward barrier to entry is the astronomical and exponentially increasing cost of training a state-of-the-art foundation model. This level of capital expenditure fundamentally restricts the field of competition to a small cadre of the world’s most valuable corporations and the startups they finance.

The escalation in training costs has been breathtaking. In 2017, the original Transformer model, which introduced the core architecture of modern LLMs, cost an estimated $900 to train. Just three years later, in 2020, the compute cost for training OpenAI’s GPT-3 (with 175 billion parameters) was estimated to be as high as $4.6 million.12 The next generation of models saw this cost rise by more than an order of magnitude. Training OpenAI’s GPT-4 reportedly cost well over $100 million, while Google’s Gemini Ultra is estimated to have required $191 million in training compute alone.12
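The growth implied by these estimates can be checked directly. The sketch below computes the fold increase, and the corresponding number of orders of magnitude (log10 of the ratio), between consecutive figures cited above. GPT-4’s “well over $100 million” is entered as exactly $100 million, so the increases into and out of that entry are approximations anchored on a floor value.

```python
import math

# Estimated training compute costs cited in the text (USD).
TRAINING_COST_USD = [
    ("Transformer", 900),
    ("GPT-3", 4_600_000),
    ("GPT-4", 100_000_000),  # "well over $100 million": treated as a floor
    ("Gemini Ultra", 191_000_000),
]


def escalation(costs):
    """Yield (earlier, later, fold increase, orders of magnitude) for consecutive entries."""
    for (prev_name, prev_cost), (name, cost) in zip(costs, costs[1:]):
        fold = cost / prev_cost
        yield prev_name, name, fold, math.log10(fold)


for prev_name, name, fold, oom in escalation(TRAINING_COST_USD):
    print(f"{prev_name} -> {name}: {fold:,.0f}x ({oom:.1f} orders of magnitude)")
```

The GPT-3 to GPT-4 step alone is roughly a 22-fold increase, about 1.3 orders of magnitude.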

While more recent models have demonstrated some gains in training efficiency (estimates put GPT-4o at around $10 million and Anthropic’s Claude 3.5 Sonnet at “a few tens of millions,” or approximately $30 million), these figures still represent a colossal financial hurdle.14 Moreover, the push toward the absolute frontier of AI capability continues to drive costs upward. Models currently in development are being trained on compute clusters costing an estimated $500 million, and future “GPT-5 scale” models are projected to require investments in the billions.14 This economic reality creates what economists term a “natural monopoly” dynamic (more precisely, a natural oligopoly), in which fixed costs are so high that the market can sustain only a very small number of producers.4
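The fixed-cost logic can be illustrated with a deliberately crude free-entry model: if each frontier developer must pay a fixed training cost F, and the market generates a total margin pool M per model generation that entrants split evenly, at most floor(M / F) firms can cover their costs. The margin pool below is a purely hypothetical number chosen for illustration. The $100 million and $500 million costs echo figures cited above, and $2 billion stands in for the “billions” projected for future models.

```python
def max_viable_firms(margin_pool_usd: int, fixed_cost_usd: int) -> int:
    """Largest number of symmetric firms that can each recover the fixed cost.

    With N firms splitting margin_pool_usd evenly, each earns pool / N,
    which covers fixed_cost_usd only while N <= pool / fixed_cost.
    """
    return margin_pool_usd // fixed_cost_usd


HYPOTHETICAL_MARGIN_POOL = 4_000_000_000  # illustrative only, not an estimate

for fixed_cost in (100_000_000, 500_000_000, 2_000_000_000):
    n = max_viable_firms(HYPOTHETICAL_MARGIN_POOL, fixed_cost)
    print(f"fixed cost ${fixed_cost / 1e6:,.0f}M -> at most {n} viable firms")
```

Holding the pool fixed, each increase in the cost of a frontier run shrinks the number of firms the market can support (here from 40 to 8 to 2), which is the sense in which rising training costs push the industry toward a natural oligopoly.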

The Compute Barrier: The NVIDIA Moat and the Price of Power

Capital is the means, but access to specialized computational hardware at an immense scale is the end. Compute is the single greatest bottleneck in the AI value chain, and the market for this critical resource is tightly controlled.

The cost of the underlying hardware is staggering. A single NVIDIA H100 GPU, the industry’s workhorse for AI training, can cost between $30,000 and $40,000 to purchase outright.7 Even a single eight-GPU training server built on NVIDIA A100s (the previous generation) can cost upwards of $200,000 for the hardware alone, before accounting for networking, storage, power, and personnel.16

For those who cannot afford to build their own infrastructure, renting access from cloud providers is the only alternative, but this too is prohibitively expensive at scale. The hourly rental cost for a single high-end GPU like the H100 can range from $1.65 on a competitive marketplace to over $12 on a major cloud platform like AWS.17 These per-hour costs quickly accumulate into astronomical sums when considering the scale required for frontier model training. As NVIDIA’s CEO noted, training a large model can require a cluster of 25,000 GPUs running continuously for three to five months.12 The sheer scale of this requirement—both in terms of the number of chips and the duration of the training run—places it far beyond the financial and logistical capabilities of a typical startup or academic institution.
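The scale described above can be translated into dollars with simple arithmetic. The sketch below multiplies the cited 25,000-GPU cluster by the cited purchase prices and by GPU-hours at the cited rental rates. Two simplifying assumptions are involved: months are taken as 30 days of continuous operation, and H100 prices are applied to the whole cluster even though the quoted cluster size does not specify a GPU model. Networking, storage, power, and staff are excluded.

```python
# Figures cited in the text; the 30-day month and the use of H100 prices
# for the whole 25,000-GPU cluster are simplifying assumptions.
GPUS = 25_000
HOURS_PER_MONTH = 30 * 24                # assumption: continuous 30-day months
RUN_MONTHS = (3, 5)                      # "three to five months"
RENTAL_USD_PER_GPU_HOUR = (1.65, 12.30)  # marketplace low to major-cloud high
PURCHASE_USD_PER_GPU = (30_000, 40_000)  # H100 purchase price range


def rental_cost(gpus: int, months: int, rate: float) -> float:
    """GPU-hours times hourly rate; excludes networking, storage, power and staff."""
    return gpus * months * HOURS_PER_MONTH * rate


low = rental_cost(GPUS, RUN_MONTHS[0], RENTAL_USD_PER_GPU_HOUR[0])
high = rental_cost(GPUS, RUN_MONTHS[1], RENTAL_USD_PER_GPU_HOUR[1])
purchase_low = GPUS * PURCHASE_USD_PER_GPU[0]
purchase_high = GPUS * PURCHASE_USD_PER_GPU[1]

print(f"Rental for one run: ${low / 1e6:,.0f}M to ${high / 1e6:,.0f}M")
print(f"GPU purchase alone: ${purchase_low / 1e6:,.0f}M to ${purchase_high / 1e6:,.0f}M")
```

Under these assumptions, renting the cluster for a single run costs roughly $89 million at the cheapest rate over three months and about $1.1 billion at the top rate over five months, while buying the GPUs alone would cost $750 million to $1 billion.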

The Data Barrier: A Contested but Critical Moat

Compared to the hard constraints of capital and compute, the role of data as a barrier to entry is more complex and contested. However, it remains a crucial dimension of competitive advantage, particularly for the largest incumbents.

The argument for data as a significant barrier rests on the immense, proprietary datasets controlled by Big Tech platforms. Companies like Google and Microsoft possess unique, real-time data streams from their billions of users—search queries, content interactions, behavioral data—that are impossible for a new entrant to replicate.4 This data is not just voluminous; it is a continuously updated reflection of human interests and intentions, making it invaluable for refining models and training the next generation of AI agents. This advantage is being further solidified through the rise of exclusive licensing deals, where AI developers with deep pockets are paying publishers and content creators for legal access to their archives, effectively walling off high-quality training data from less capitalized rivals.19

Conversely, a compelling counterargument posits that data is not the insurmountable moat it is often claimed to be. A thriving market for both open-source and commercially licensed datasets has emerged, allowing developers to acquire the necessary raw material for training.22 Furthermore, technological advances are diminishing the reliance on massive, proprietary datasets. The development of high-quality synthetic data and the industry’s shift toward smaller, more specialized models trained on curated, high-quality data are eroding the raw scale advantage of incumbents.22 The continued dynamism of the market, with new models from various players consistently leapfrogging one another in performance, suggests that data access, while important, is not currently a decisive barrier to entry.22

A synthesis of these views suggests that while vast quantities of general data for pre-training may be increasingly commoditized, the unique, proprietary, and real-time interaction data held by incumbents provides a durable and difficult-to-replicate advantage. This is particularly true not for initial model training, but for the crucial subsequent stages of fine-tuning, reinforcement learning from human feedback (RLHF), and the development of sophisticated AI agents that learn from continuous user engagement.

These three barriers (capital, compute, and data) do not exist in isolation. They form a self-reinforcing flywheel, an “iron triangle” that creates a powerful centralizing dynamic in the AI market. The logic of this flywheel runs as follows: to train a cutting-edge model on massive Data, one needs access to massive-scale Compute. To acquire that Compute, whether through purchase or rental, one needs immense Capital. The chain closes into a loop through the unique advantage of incumbent platforms: the ability to deploy a new model across a pre-existing user base of billions. This massive distribution channel is what allows a firm to effectively monetize its multi-hundred-million-dollar investment, generating the profits (the new Capital) required to reinvest in the next generation of compute and data acquisition, thus restarting the cycle.

Only the largest, most established technology companies—Microsoft, Google, Amazon, Meta—already possess all the necessary components of this flywheel. A brilliant AI startup, even one with world-class talent and novel algorithms, brings only a fraction of what is needed. It lacks the nine-figure capital reserves, the hyperscale cloud infrastructure, and the global distribution platform. Consequently, it is a matter of structural necessity, not mere strategic choice, that these startups are compelled to partner with an incumbent who can provide the missing pieces. This structural reality is the primary engine driving the formation of the “killer collaborations” that are now defining the competitive landscape of the AI industry.
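The flywheel, and the resulting pressure to partner, can be illustrated with a toy simulation. Everything in it is an assumption made for illustration: capital and costs are in arbitrary units, the frontier training cost triples each generation, and the hypothetical `distribution_multiple` stands for how much revenue a firm earns back per unit spent on a model, which in the argument above depends chiefly on access to an existing user base. A firm trains a frontier model only in generations where its capital covers the cost.

```python
def frontier_generations(capital, distribution_multiple, frontier_cost,
                         cost_growth, generations):
    """Return the generations in which a firm can afford a frontier training run.

    Each affordable generation, the firm spends `frontier_cost` on compute and
    data and earns `frontier_cost * distribution_multiple` back through
    deployment, so its capital changes by frontier_cost * (multiple - 1).
    """
    trained = []
    for gen in range(generations):
        if capital >= frontier_cost:
            capital += frontier_cost * (distribution_multiple - 1)
            trained.append(gen)
        frontier_cost *= cost_growth  # the next frontier model costs more
    return trained


# Hypothetical scenarios (arbitrary units).
incumbent = frontier_generations(1000, 3, 100, 3, 5)
standalone_startup = frontier_generations(150, 0.8, 100, 3, 5)
partnered_startup = frontier_generations(150, 3, 100, 3, 5)
print(incumbent, standalone_startup, partnered_startup)
```

Under these assumptions, the standalone startup trains one model and then falls permanently behind the frontier, while the same startup with access to an incumbent’s distribution keeps pace in every generation. The toy model captures the structural, rather than strategic, pressure to partner.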

Table 1: Barriers to Entry in Frontier AI Model Development

| Barrier | Key Metrics & Costs | Significance/Impact |
|---|---|---|
| Capital | Training Costs: GPT-3 (~$4.6M), GPT-4 (>$100M), Gemini Ultra (~$191M), Claude 3.5 Sonnet (~$30M).12 | Restricts frontier model development to a handful of the world’s most valuable companies and the startups they fund, creating a de facto barrier for independent innovation. |
| Compute | Hardware: NVIDIA H100 GPU cost (~$30,000-$40,000).7 NVIDIA market share (>80% AI accelerators, 94% discrete GPUs).7 Rental: $1.65 – $12.30 per GPU per hour.17 Scale: 25,000+ GPUs for months.12 | Creates a critical bottleneck controlled by an effective hardware monopoly (NVIDIA) and a cloud oligopoly (AWS, Azure, Google Cloud), making access to at-scale compute the primary prerequisite for competition. |
| Data | Proprietary Moats: Incumbents leverage vast, real-time user data from core services (e.g., Google Search).15 Licensing: Exclusive deals for high-quality content archives are emerging.19 Scale: Models are trained on trillions of tokens. | While general data is increasingly available, proprietary interaction data provides a durable advantage for model refinement and agent development. High costs of licensing quality data further advantage well-capitalized firms. |

Part II: The Mechanics of Market Consolidation

The structural conditions predisposing the AI market to concentration have been swiftly capitalized upon through a series of strategic alliances that are reshaping the competitive landscape. These are not traditional arm’s-length commercial agreements but deep, multi-billion-dollar partnerships that effectively fuse the innovative dynamism of leading AI startups with the immense infrastructural power of incumbent technology giants. This section examines these “alliances of power” as the primary mechanism of market consolidation, arguing that they function as de facto vertical mergers that achieve anticompetitive effects while often evading the full scope of traditional regulatory review. This emerging pattern of consolidation has not gone unnoticed, prompting a global regulatory awakening as competition authorities from the United States, the United Kingdom, and the European Union begin to scrutinize these deals and formulate a new playbook for the AI era.

Section 3: Alliances of Power: Big Tech Partnerships as De Facto Mergers

In the nascent AI market, the most significant competitive moves are not outright acquisitions but a new form of strategic entanglement: deep, exclusive, and financially massive partnerships. These collaborations allow incumbent cloud providers to co-opt the most promising AI innovators, tying their future development to proprietary infrastructure and neutralizing them as potential long-term, independent threats. An analysis of the three pivotal partnerships—Microsoft-OpenAI, Google-Anthropic, and Amazon-Anthropic—reveals a consistent pattern of “soft” vertical integration that is rapidly consolidating control over the AI stack.

Case Study 1: Microsoft & OpenAI – The Trailblazing Alliance

The partnership between Microsoft and OpenAI has been the defining alliance of the generative AI era, setting the template for subsequent deals. It is a multi-faceted collaboration that goes far beyond a simple investment.

- Structure of the Deal: Microsoft has committed over $13 billion to OpenAI, delivered not only in cash but primarily in the form of massive-scale cloud computing credits on its Azure platform.23 This arrangement is not a standard equity investment; it is a complex profit-sharing agreement under which Microsoft is reportedly entitled to 75% of OpenAI’s profits until its investment is recouped, and to 49% thereafter, up to a mutually agreed-upon cap.26 Crucially, the deal grants Microsoft deep, preferential access to OpenAI’s models, which it has integrated across its entire product ecosystem.

- Strategic Rationale: The symbiosis is clear. For OpenAI, the partnership provided the two resources it needed most and could not acquire on its own: the immense capital and the hyperscale, purpose-built supercomputing infrastructure required to train successive generations of frontier models like GPT-4.24 For Microsoft, the deal delivered an immediate first-mover advantage in the generative AI race, allowing it to leapfrog competitors by embedding the world’s most advanced AI models into its core businesses, from the Azure OpenAI Service for developers to Microsoft 365 Copilot for enterprise users.23 This strategy mirrors Microsoft’s historical playbook of leveraging partnerships and acquisitions to establish dominance in critical new layers of technology infrastructure, a pattern seen in its successful build-out of the Azure cloud platform in the 2010s.23

- Competitive Effects: The alliance has profoundly reshaped the market. It has effectively tied the world’s leading AI research lab to the world’s second-largest cloud provider. This has created a powerful flywheel, in which the allure of OpenAI’s models drives customers to Azure, in turn fueling Azure’s growth and providing Microsoft with the revenue to continue funding OpenAI’s research. This is borne out in the financial data: in one quarter, AI services were credited with contributing 12 percentage points of Azure’s 33% revenue growth.24 The result is a tightly integrated ecosystem that is exceedingly difficult for competitors to challenge, as it combines best-in-class models with a global distribution and infrastructure platform.
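The reported profit-sharing terms describe a two-tier waterfall, which is easier to follow as a function. The sketch below models Microsoft’s cumulative payout under the reported 75% and 49% rates. The terms have only been reported, not published; the cap is not given in the text and is therefore a parameter; and the figures in the example are hypothetical round numbers in billions of dollars.

```python
def microsoft_take(cumulative_profit, investment, cap,
                   first_rate=0.75, second_rate=0.49):
    """Cumulative payout to Microsoft under the reported two-tier structure.

    Tier 1: `first_rate` of profits until the payout equals `investment`.
    Tier 2: `second_rate` of further profits, until the payout reaches `cap`.
    """
    # Cumulative profit at which tier 1 has fully repaid the investment.
    recoup_point = investment / first_rate
    if cumulative_profit <= recoup_point:
        take = first_rate * cumulative_profit
    else:
        take = investment + second_rate * (cumulative_profit - recoup_point)
    return min(take, cap)  # the payout never exceeds the agreed cap


# Hypothetical figures (billions): an investment of 12 and a cap of 40.
for profit in (8, 16, 26, 116):
    print(profit, round(microsoft_take(profit, investment=12, cap=40), 2))
```

With these inputs, Microsoft receives 6 of the first 8 in profit, is fully repaid once cumulative profit reaches 16, collects 49% of profits beyond that point, and is capped at 40 however large profits grow.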

Case Study 2: Google & Anthropic – The Incumbent’s Response

Facing the formidable Microsoft-OpenAI alliance, Google moved to secure its own high-profile AI partner, forming a deep collaboration with Anthropic, a prominent AI safety and research company founded by former senior members of OpenAI.

- Structure of the Deal: Google has invested over $2 billion in Anthropic and has established a long-term cloud partnership.29 Under this agreement, Anthropic has designated Google Cloud as a “preferred cloud provider” and uses Google’s custom-designed Tensor Processing Units (TPUs), alongside GPU clusters, for training and deploying its advanced models, including the Claude family.32

- Strategic Rationale: For Google, this partnership is a crucial defensive and offensive move. Defensively, it prevents Microsoft’s Azure from becoming the sole cloud destination for developers seeking to build on cutting-edge foundation models. By offering Anthropic’s highly capable Claude models on its platform, Google provides a compelling alternative to OpenAI’s GPT series.34 Offensively, it gives Google a significant stake in a key innovator with a strong research pedigree, diversifying its AI portfolio beyond its own in-house efforts (e.g., Gemini).

- Competitive Effects: The partnership creates powerful incentives for both parties to deepen their integration. Anthropic is incentivized to optimize its models to run most efficiently on Google’s unique hardware (TPUs), while the thousands of businesses already using Google Cloud are given a seamless, integrated path to adopt Anthropic’s models through platforms like Vertex AI.33 This dynamic risks foreclosing competition by steering a significant portion of the AI development market toward Google’s infrastructure.

Case Study 3: Amazon & Anthropic – The Cloud Leader’s Countermove

As the undisputed leader in the cloud infrastructure market, Amazon Web Services (AWS) could not afford to be left without a top-tier AI partner. Its response was a massive investment in Anthropic, creating a complex dynamic where Anthropic is now deeply partnered with two of the three major cloud providers.

- Structure of the Deal: Amazon has committed to investing up to $8 billion in Anthropic, making it the startup’s largest known backer.29 The deal designates AWS as Anthropic’s “primary cloud provider” for mission-critical workloads like model development and safety research. A key and strategically significant component of the agreement is Anthropic’s commitment to use Amazon’s proprietary, custom-designed AI chips—Trainium for training and Inferentia for inference—to build and deploy its future models.35

- Strategic Rationale: The partnership is designed to defend AWS’s market leadership in the cloud by ensuring it remains a central hub for generative AI development. By securing Anthropic, AWS can offer its vast customer base access to another leading family of foundation models via its Amazon Bedrock service.37 The commitment to use Amazon’s custom silicon is a longer-term strategic play. By creating a powerful customer for its own chips, Amazon aims to build a viable alternative to NVIDIA’s hardware, potentially creating a fully integrated, end-to-end Amazon AI stack from the silicon up.

- Competitive Effects: This alliance represents a clear and potent example of vertical integration. It ties a leading model developer (Layer 3) to the dominant cloud platform (Layer 2) and a nascent custom hardware ecosystem (Layer 1). This creates a powerful incentive for AWS’s millions of customers to adopt Anthropic’s models, which will be optimized for and deeply integrated with the AWS environment. This risks foreclosing opportunities for other model developers who lack such a powerful distribution channel and for other cloud providers who cannot offer the same level of integration with Anthropic’s technology.

These partnerships are not merely large investments; they represent a novel and highly effective strategy for market consolidation. They function as what regulators have begun to term “killer collaborations”.38 The term “killer acquisition” traditionally refers to an incumbent buying a nascent competitor simply to discontinue its product and eliminate a future threat. A “killer collaboration” is a more sophisticated evolution of this concept. Instead of acquiring and shutting down the innovator, the incumbent platform invests heavily, becoming an indispensable partner for capital and infrastructure. This deep integration allows the incumbent to absorb the startup’s innovative potential, steer its technological roadmap to align with its own strategic interests (e.g., driving cloud consumption, promoting proprietary hardware), and prevent it from ever becoming a truly independent, disruptive force that could challenge the incumbent’s core business.

Ultimately, these deals achieve the primary competitive effects of a vertical merger—foreclosing rivals from key inputs and customers, raising barriers to entry for new players, and entrenching the market power of the incumbent—but they often do so through a series of investments and commercial agreements that may not trigger traditional, size-based merger review thresholds. This necessitates a new analytical framework from antitrust enforcers, one that looks beyond the form of the transaction to its substantive effect on the structure of the market.

Table 2: Analysis of Major Strategic AI Partnerships

| Dimension | Microsoft & OpenAI | Google & Anthropic | Amazon & Anthropic |
|---|---|---|---|
| Total Investment | > $13 Billion 23 | > $2 Billion 29 | Up to $8 Billion 29 |
| Key Cloud/Hardware Terms | Exclusive/preferred use of Microsoft Azure for training and inference.27 | Preferred use of Google Cloud; utilization of custom Google TPU/GPU clusters.32 | Designated AWS as “primary cloud provider”; commitment to use custom AWS Trainium & Inferentia chips.35 |
| Strategic Goal for Incumbent | Integrate cutting-edge AI across entire product stack; drive Azure growth and establish a first-mover advantage.23 | Secure a top-tier AI partner to compete with the Azure-OpenAI alliance; promote Google’s cloud and custom hardware.31 | Defend AWS’s cloud market leadership; create a customer for and validate its proprietary AI silicon to challenge NVIDIA.35 |
| Status of Regulatory Review | Under formal investigation by the UK’s CMA, the European Commission, and the US FTC.5 | Under preliminary investigation by the UK’s CMA.5 | Under investigation by the UK’s CMA and the US FTC.5 |

Section 4: A Global Regulatory Awakening

The rapid consolidation of the AI value chain through these strategic partnerships has not gone unnoticed by competition authorities around the world. In a marked departure from previous technological shifts, where regulatory action often lagged years behind market developments, enforcers in key jurisdictions are taking a distinctly proactive and preemptive stance. A clear international consensus is emerging that the unique structure of the AI market and the speed of its development necessitate early and vigorous scrutiny to prevent the entrenchment of monopoly power. This global regulatory awakening is characterized by a shared set of concerns and a coordinated effort to develop new analytical tools and enforcement strategies tailored to the AI era.

The UK’s Proactive Stance (CMA)

The United Kingdom’s Competition and Markets Authority (CMA) has positioned itself at the forefront of this global effort. It was one of the first major regulators to launch a comprehensive review of the AI foundation model market, publishing an initial report in September 2023, followed by a more detailed “Update Paper” in April 2024.5

The CMA’s core concern is that the AI sector is developing in ways that risk “negative market outcomes”.39 The agency has identified a set of “interconnected risks” where a small number of incumbent firms could leverage their existing power over key inputs—specifically compute, data, and technical expertise—to entrench their positions and foreclose competition.5 The CMA is particularly vigilant about how partnerships and vertical integration could allow dominant players to control essential resources, thereby restricting access for smaller firms and hindering the development of a competitive FM market.

Reflecting these concerns, the CMA has moved from analysis to action. It has opened formal investigations into the pivotal partnerships that define the industry, including Microsoft’s relationship with OpenAI and Amazon’s investment in Anthropic. It has also launched a preliminary inquiry into the Google-Anthropic partnership.5 This proactive scrutiny, occurring at the very formation stage of the market, signals the CMA’s intent to apply competition law before markets have irrevocably “tipped.”

U.S. Enforcement (DOJ & FTC)

In the United States, the Department of Justice (DOJ) and the Federal Trade Commission (FTC) have made it clear that they intend to apply existing antitrust laws vigorously to the AI sector, rejecting any notion that novel technologies are exempt from scrutiny.

A landmark joint statement issued by the FTC, DOJ, Consumer Financial Protection Bureau (CFPB), and Equal Employment Opportunity Commission (EEOC) served as a powerful declaration of intent. The agencies affirmed that established legal principles governing fair competition, consumer protection, and civil rights apply fully to the development and deployment of automated systems. The statement explicitly warned against the potential for AI to “perpetuate unlawful bias, automate unlawful discrimination, and produce other harmful outcomes,” signaling a broad enforcement mandate that encompasses both economic and social harms.41

The agencies’ focus is twofold. First, they are deeply concerned with how control over essential inputs (data, talent, and computational resources) can be used to create unlawful barriers to entry, enabling dominant firms to engage in exclusionary conduct such as bundling, tying, and exclusive dealing.42 Second, they are actively investigating the potential for AI and algorithms to facilitate new forms of collusion. This includes algorithmic price-fixing, in which competitors share nonpublic data with a common pricing tool that then recommends coordinated prices; the DOJ’s lawsuit against the property management software company RealPage alleges that competing landlords used its software in this way to raise rents.38 To address these novel challenges, the DOJ has updated its corporate compliance guidance to ask whether companies assess and mitigate the antitrust risks associated with their use of AI.40
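To make the alleged mechanism concrete, the following deliberately simplified Python sketch contrasts independent pricing with a shared recommender. It is not a description of RealPage's software or of the details of the DOJ's allegations: the landlords, rents, occupancy figures, and both pricing rules are hypothetical assumptions chosen only to show why pooling competitors' private data in one tool raises collusion concerns.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Landlord:
    name: str
    rent: float       # current monthly rent (hypothetical units)
    occupancy: float  # share of units occupied, 0.0-1.0; private to each landlord

def independent_price(landlord: Landlord, target: float = 0.95) -> float:
    """Competitive benchmark (assumed rule): each landlord sees only its own
    occupancy and cuts rent by 5% when it is below target, otherwise raises it by 2%."""
    factor = 0.95 if landlord.occupancy < target else 1.02
    return round(landlord.rent * factor, 2)

def shared_recommendation(landlords: list[Landlord], target: float = 0.90) -> float:
    """Hypothetical shared tool: pools every landlord's private occupancy data
    and recommends one rent for all, anchored on the highest current rent and
    raised by 3% whenever pooled occupancy meets the target."""
    pooled_occupancy = mean(l.occupancy for l in landlords)
    anchor = max(l.rent for l in landlords)
    factor = 1.03 if pooled_occupancy >= target else 1.0
    return round(anchor * factor, 2)

market = [Landlord("A", 1000, 0.88), Landlord("B", 1050, 0.97), Landlord("C", 980, 0.93)]
independent = [independent_price(l) for l in market]  # [950.0, 1071.0, 931.0]
coordinated = shared_recommendation(market)           # 1081.5 for every landlord
```

Under these toy assumptions, independent pricing lets the two low-occupancy landlords cut rents, while the shared tool moves every landlord to a single rent above any of the independently set ones. Real pricing software is far more complex; the sketch only isolates the structural concern.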

The European Union’s Dual Approach (Regulation & Competition)

The European Union is pursuing a comprehensive, two-pronged strategy to govern the AI market, combining a groundbreaking, forward-looking regulatory framework with the robust application of traditional competition law.

The centerpiece of its regulatory effort is the EU AI Act, the world’s first comprehensive, binding legal framework for artificial intelligence. The Act establishes a risk-based approach, imposing strict obligations on “high-risk” AI systems related to risk assessment, data quality, transparency, human oversight, and robustness.44 While its primary focus is on safety, security, and the protection of fundamental rights, the Act’s stringent requirements will have significant competitive implications. By mandating high standards for data governance and transparency, the AI Act may help level the playing field between incumbents with vast, opaque datasets and new entrants committed to more responsible development practices.

Alongside this new regulatory regime, the European Commission’s competition directorate (DG COMP) is actively applying its existing antitrust powers. The Commission has scrutinized the major Big Tech partnerships and has launched a broader inquiry into the competitive dynamics in generative AI markets.45 European regulators have expressed concerns that are closely aligned with those of their UK and US counterparts, focusing on how “gatekeeper” platforms might abuse their dominance to foreclose AI rivals. Notably, the EU has shown a strong historical willingness to consider interoperability mandates as a remedy in technology markets, a tool that may be deployed to address concerns about lock-in within emerging AI ecosystems.40

The most striking feature of this global regulatory activity is its preemptive and convergent nature. In previous technology waves, such as the rise of search engines, social media, and mobile app stores, antitrust enforcement was largely reactive and often began years after a market leader had established an unassailable position. Today, regulators are intervening during the market’s formative stages. The historical antitrust cases against IBM and Microsoft, for instance, were initiated long after those firms had cemented their dominance in mainframes and PC operating systems, respectively.48

Today’s actions are fundamentally different. The CMA, FTC, and European Commission are scrutinizing partnerships and market structures as they are being constructed, not a decade after the fact. This represents a significant paradigm shift in technology antitrust, reflecting a hard-won lesson from the past two decades: waiting for competitive harm to become obvious and undeniable often means it is too late for any remedy to be effective. This emerging international consensus on the need for a proactive, forward-looking competition policy in the AI sector provides a powerful political and intellectual foundation for the argument that new, structurally minded interventions are not only justified but necessary.

Part III: A Proactive Antitrust Framework for the AI Era

The structural realities of the AI stack and the emergent mechanics of its consolidation demand a competition policy that is as innovative as the technology it seeks to govern. A reactive approach, focused on punishing anticompetitive conduct after the fact, is destined to fail in a market that is consolidating in real time. What is needed is a proactive framework that addresses the root causes of market concentration by reshaping the architecture of the market itself. This part makes the prescriptive case for such a framework, drawing critical lessons from the landmark antitrust cases of the 20th-century computing era to build a robust justification for structural remedies. It then articulates the central policy recommendation: a mandate for multi-layer interoperability, modeled on the open, permissionless architecture of the internet, designed to dismantle walled gardens and ensure that the AI economy develops as a competitive and dynamic ecosystem.

Section 5: Lessons from a Pre-Digital Age: The Ghosts of U.S. v. IBM and U.S. v. Microsoft

To design an effective antitrust playbook for the future, it is essential to learn from the past. The two most significant technology monopolization cases of the 20th century, those against IBM and Microsoft, offer enduring lessons about the nature of power in integrated technology markets and the unique efficacy of remedies that promote openness and interoperability. A comparative analysis of these historical precedents reveals that in markets characterized by strong network effects and technical integration, the most potent interventions are not fines or conduct orders but structural remedies that pry open chokepoints and enable new forms of competition.

U.S. v. IBM (1969-1982): The Power of Unbundling

In 1969, the U.S. Department of Justice filed a monumental antitrust suit against IBM, alleging that the company had illegally monopolized the market for mainframe computers.48 At the time, IBM’s business model was one of complete vertical integration; it sold customers a single, bundled package that included hardware, the operating system, application software, and support services. This bundling strategy made it nearly impossible for independent companies to compete in the nascent software market, as customers received all necessary software as part of their IBM hardware lease.50

The case dragged on for 13 years and was ultimately dropped by the government in 1982, partly because the technological landscape had begun to shift from mainframes to personal computers.50 However, the case’s most profound impact came not from a final verdict, but from a strategic decision IBM made under the immense pressure of the investigation. In 1969, shortly after the suit was filed, IBM voluntarily chose to “unbundle” its software and services, pricing and selling them separately from its hardware.48

This decision is widely credited as the catalyst that created the modern independent software industry. By unbundling, IBM inadvertently opened up a competitive space. New companies could now emerge to write and sell software that ran on IBM’s dominant hardware platform, competing on the merits of their products rather than being locked out by IBM’s integrated offering. The lesson for the AI era is profound: forcing a dominant firm to unbundle the integrated layers of its technology stack can be a powerful pro-competitive move, capable of unleashing a wave of innovation in the newly opened downstream markets. It demonstrates that breaking the technical and commercial ties between different layers of a stack can be more effective than breaking up the company itself.

U.S. v. Microsoft (1998-2001): The Power of Mandated API Access

Three decades later, the government confronted a similar dynamic in a new technological context. In 1998, the DOJ and 20 states sued Microsoft, alleging that it had abused its monopoly over the PC operating system market (Windows) to crush a nascent competitive threat from web browsers.49 The government charged that Microsoft had illegally bundled its Internet Explorer (IE) browser with every copy of Windows, leveraging its OS dominance to foreclose competition from the then-leading browser, Netscape Navigator.49

The district court initially found Microsoft liable and ordered the company to be broken into two separate entities: one for the operating system and one for applications. While this structural remedy was overturned on appeal, the final settlement contained a crucial, albeit weaker, structural component: it required Microsoft to disclose to third-party developers the Application Programming Interfaces (APIs) that its own middleware used to interoperate with Windows.49 APIs are the technical interfaces that allow different pieces of software to communicate with each other. By mandating that Microsoft provide documentation of and access to the critical APIs that connected applications to the Windows operating system, the remedy aimed to create a more level playing field. It was intended to ensure that rival software (including competing browsers and middleware) could interoperate more effectively with the dominant platform, thereby reducing Microsoft’s ability to use its control over Windows to disadvantage competitors in adjacent markets.

The lesson from the Microsoft case is that mandated interoperability is a potent and viable antitrust remedy. It established the principle that a dominant platform can be compelled to open its interfaces as a means of restoring competition. This approach offers a more targeted and less disruptive alternative to a full corporate breakup; one does not need to dismantle the company to dismantle its monopoly power. Instead, one can force it to open the critical gateways that connect its dominant platform to the broader ecosystem, allowing competition to flourish on its periphery.

The common thread running through the IBM and Microsoft cases is the recognition that structural problems require structural solutions. In technology markets defined by platform dominance, network effects, and deep technical integration, purely behavioral remedies, which essentially amount to a court order to “stop behaving badly,” are often insufficient. Such remedies are difficult to monitor and easy to circumvent. The enduring impact of both landmark cases came from remedies that altered the fundamental architecture of the market itself. IBM’s unbundling, adopted under the pressure of litigation, and Microsoft’s court-mandated API access were both forms of openness brought about by antitrust enforcement.54 They did not just penalize past conduct; they changed the rules of engagement for the future, creating new possibilities for competition.

This historical evidence provides a powerful justification for applying a similar philosophy to the AI stack. The concern today, that dominant firms are leveraging their control over foundational layers like cloud infrastructure to foreclose competition in higher layers like foundation models, directly echoes the concerns of the mainframe and PC eras. The lesson from history is clear: the most effective response is not to micromanage corporate behavior but to mandate interoperability at the critical interfaces between the layers of the stack. This approach is a modern, surgically precise form of structural remedy, tailored to the realities of a deeply integrated digital economy.

Section 6: Engineering Competition: A Mandate for Multi-Layer Interoperability

The central prescriptive argument of this analysis is that an effective competition policy for the AI era must be proactive and architectural. It must move beyond punishing anticompetitive outcomes and instead focus on engineering a market structure that fosters competition by design. The most powerful tool for achieving this is a mandate for interoperability across the critical layers of the AI stack. By requiring dominant firms to open the interfaces to their walled gardens, such a policy can lower barriers to entry, reduce switching costs, and shift the basis of competition from lock-in to innovation. The successful, decentralized development of the open internet provides a compelling historical precedent for this approach.

The Guiding Precedent: The Open Architecture of the Internet

The internet’s remarkable history of explosive growth and permissionless innovation was not an accident; it was the direct result of a series of deliberate design choices made by its early architects. The core of the internet was built on a suite of communication protocols—most notably the Transmission Control Protocol/Internet Protocol (TCP/IP)—that were intentionally designed to be open, non-proprietary, and interoperable.57

This open architecture had profound competitive implications. It ensured that no single company or entity could own or control the network itself. Any computer that could “speak” the common language of TCP/IP could connect to the global network, and any developer could build new applications and services on top of these shared protocols without needing to ask for permission from a central gatekeeper.60 This created an incredibly fertile ground for innovation, leading to the development of everything from the World Wide Web to email and streaming video. This stands in stark contrast to the closed, proprietary ecosystems that are currently forming within the AI stack, where access to foundational layers is mediated by a small number of corporate gatekeepers. The AI industry is now at a critical juncture, facing a choice between replicating the open, interoperable model of the internet or succumbing to the closed, walled-garden model of previous technology monopolies.
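As a loose illustration of what "permissionless" means at the protocol level, the Python sketch below uses only the standard `socket` and `threading` modules: a toy echo server and an independently written client interoperate simply because both implement TCP/IP, with no registration or approval step in between. The echo service, the loopback address, and the message are arbitrary choices for the example, not anything described in the sources.

```python
import socket
import threading

def serve_once(server: socket.socket) -> None:
    """Accept a single connection and echo back every byte received."""
    conn, _addr = server.accept()
    with conn:
        chunks = []
        while True:
            chunk = conn.recv(1024)
            if not chunk:  # client signalled the end of its message
                break
            chunks.append(chunk)
        conn.sendall(b"".join(chunks))

def round_trip(message: bytes) -> bytes:
    # One party offers a service on a free loopback port.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    port = server.getsockname()[1]
    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()
    # An independently written client: it only needs to speak TCP/IP.
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(message)
        client.shutdown(socket.SHUT_WR)  # tell the server we are done sending
        reply = b""
        while chunk := client.recv(1024):
            reply += chunk
    worker.join()
    server.close()
    return reply

if __name__ == "__main__":
    print(round_trip(b"hello, open network"))
```

The point is architectural rather than technical: neither side needed a business relationship with the other, or with any network owner, to exchange data.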

A Proposed Multi-Layer Interoperability Framework

A successful interoperability mandate must be targeted and specific, addressing the unique chokepoints and competitive dynamics at each critical layer of the AI stack. Such a framework would not be a one-size-fits-all solution but a series of tailored interventions designed to pry open the most significant barriers to competition.

- Layer 2 (Cloud Platform): Mandating Fair Access and Data Portability

- The Problem: Dominant cloud providers can leverage their market power to disadvantage competitors. They can create “vendor lock-in” through high data egress fees and technical incompatibilities that make it difficult and expensive for customers to switch to a rival cloud or adopt a multi-cloud strategy.1 They may also discriminate in how they provide access to scarce, high-performance AI hardware.

- The Mandate: An effective remedy at this layer has two components. First, regulators should implement non-discrimination rules, requiring dominant cloud providers to offer access to critical AI resources, particularly advanced GPU clusters, on fair, reasonable, and non-discriminatory (FRAND) terms to all customers, including competing AI model developers.1 Second, regulators must enforce robust data and workload portability standards. This would eliminate punitive data transfer fees and promote the use of open formats (like JSON, XML, and Parquet), allowing enterprise customers to move their data and AI workloads between different cloud providers seamlessly and with minimal cost.61

- Layer 3 (Foundation Model): Mandating Model and Agent Interoperability

- The Problem: The foundation model layer is becoming another point of lock-in. Each major AI provider (OpenAI, Anthropic, Google) has its own proprietary API, prompt structure, and set of functionalities. This forces application developers to build their products for a specific model ecosystem, making it difficult to switch to a different model provider or use multiple models from different providers in a single workflow.63

- The Mandate: Regulators should convene standards bodies to develop and mandate open standards for interacting with foundation models. This would involve creating a universal, standardized API for core model functions like text generation and analysis. It would also require standardizing prompt formats and normalizing the structure of model outputs, so that an application could send the same request to models from different providers and receive a predictably structured response.63 Emerging open protocols, such as the Model Context Protocol (MCP) for connecting AI systems to external tools and data and the Agent Communication Protocol (ACP) for agent-to-agent communication, suggest a practical technical path toward this goal.64

- Layer 4 (Application): Ensuring a Level Playing Field

- The Problem: Vertically integrated companies that own both a dominant foundation model and a suite of downstream applications have a powerful incentive and ability to engage in “self-preferencing.” They can give their own applications (e.g., Microsoft 365 Copilot) preferential access to the newest model features, private APIs, or user data, placing third-party applications that rely on the same underlying model at a significant competitive disadvantage.1

- The Mandate: Regulators must enforce strict anti-self-preferencing rules. These rules would prohibit a platform owner from using its control over the foundation model to benefit its own applications in ways that are not available to all other developers on the platform. This ensures that all applications compete on a level playing field, based on the quality of their user experience and features, not on privileged access to the underlying platform.1
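One way an auditor might operationalize an anti-self-preferencing rule is to compare the model capabilities a platform exposes to its own applications with those it exposes to every third-party developer. The Python sketch below assumes a hypothetical access table; the capability names and app identifiers are invented for illustration and do not describe any real platform.

```python
from dataclasses import dataclass, field

@dataclass
class PlatformAccess:
    """Hypothetical audit record: model capabilities each app is allowed to call."""
    first_party: dict[str, set[str]] = field(default_factory=dict)
    third_party: dict[str, set[str]] = field(default_factory=dict)

def self_preferencing_gaps(access: PlatformAccess) -> set[str]:
    """Capabilities used by any first-party app that are not open to *every*
    third-party developer, i.e. candidate self-preferencing violations."""
    first = set().union(*access.first_party.values())
    if not access.third_party:
        return first  # no third-party access recorded: every first-party capability is exclusive
    open_to_all = set.intersection(*access.third_party.values())
    return first - open_to_all

access = PlatformAccess(
    first_party={"own_assistant": {"text_generation", "early_model_preview", "user_graph_data"}},
    third_party={
        "rival_writer": {"text_generation"},
        "rival_crm": {"text_generation", "early_model_preview"},
    },
)
print(sorted(self_preferencing_gaps(access)))  # ['early_model_preview', 'user_graph_data']
```

Using the intersection of third-party access, rather than the union, reflects the rule's wording: a capability granted to one favored partner but not to all other developers is still flagged.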

Mandating interoperability is not a form of punishment or heavy-handed regulation; it is a sophisticated, market-shaping tool designed to reorient the very basis of competition. In the current paradigm, the primary incentive for a dominant firm is to create and reinforce lock-in. The goal is to build the most inescapable “walled garden” by using proprietary APIs, deep technical integrations, and bundled services to make it as difficult as possible for customers and developers to leave.23
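A back-of-the-envelope calculation shows how egress fees and proprietary integrations translate into lock-in. The sketch assumes a hypothetical per-gigabyte egress rate and a hypothetical engineering cost for reworking each provider-specific integration; none of the figures come from any provider's price list or from the sources cited here.

```python
def switching_cost(data_gb: float, egress_per_gb: float,
                   integrations: int, rework_per_integration: float) -> float:
    """One-off cost of leaving a provider: egress fees for moving data out,
    plus engineering work to rewrite each provider-specific integration."""
    return data_gb * egress_per_gb + integrations * rework_per_integration

# Hypothetical enterprise: 500 TB of data and 40 proprietary integration points.
locked_in = switching_cost(500_000, 0.09, 40, 25_000)
# Same enterprise if egress fees were eliminated and open interfaces left
# (by assumption) only 4 integrations needing rework.
interoperable = switching_cost(500_000, 0.0, 4, 25_000)

print(f"locked in: ${locked_in:,.0f}  interoperable: ${interoperable:,.0f}")
# locked in: $1,045,000  interoperable: $100,000
```

Under these assumptions the dominant term is integration rework rather than egress, which illustrates why fee elimination alone would not dissolve lock-in without standardized interfaces.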

An interoperability mandate fundamentally disrupts this logic. If an application developer can seamlessly switch API calls between a model hosted on Azure, one on Google Cloud, and a self-hosted open-source alternative, the developer is no longer locked into any single ecosystem. The basis of competition is transformed. Cloud providers can no longer compete primarily on their ability to create lock-in; they must compete on the merits of their offerings—the raw performance of their models, the price of their services, the quality of their developer tools, and the reliability of their infrastructure.
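The following Python sketch illustrates the kind of normalization a standardized model API would provide. The two "provider" response shapes are invented for the example and are not the real formats of OpenAI, Anthropic, Google, or any other vendor; `Completion` and `ModelClient` are hypothetical names for a common response type and request interface. Once each provider is wrapped behind that interface, application code can switch providers by changing a single argument.

```python
from dataclasses import dataclass
from typing import Protocol

@dataclass
class Completion:
    """Hypothetical standardized response that every provider must return."""
    provider: str
    text: str

class ModelClient(Protocol):
    """Hypothetical standardized request interface."""
    def generate(self, prompt: str) -> Completion: ...

class ProviderA:
    """Adapter for an invented provider that nests output under 'choices'."""
    def _native_call(self, prompt: str) -> dict:
        return {"choices": [{"output": f"A: {prompt}"}]}  # stand-in for a network call

    def generate(self, prompt: str) -> Completion:
        raw = self._native_call(prompt)
        return Completion("provider_a", raw["choices"][0]["output"])

class ProviderB:
    """Adapter for an invented provider that returns a list of typed content blocks."""
    def _native_call(self, prompt: str) -> dict:
        return {"content": [{"type": "text", "value": f"B: {prompt}"}]}

    def generate(self, prompt: str) -> Completion:
        raw = self._native_call(prompt)
        text = "".join(b["value"] for b in raw["content"] if b["type"] == "text")
        return Completion("provider_b", text)

def summarize(client: ModelClient, document: str) -> str:
    # Application logic depends only on the standard interface.
    return client.generate(f"Summarize: {document}").text

for client in (ProviderA(), ProviderB()):
    print(summarize(client, "Q3 report"))
# A: Summarize: Q3 report
# B: Summarize: Q3 report
```

In this sketch the adapters absorb each provider's quirks; under a mandated standard, providers would instead expose the common shape natively, and the adapter layer would disappear.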

This shift has profound, pro-competitive consequences. It dramatically lowers the barriers to entry for new foundation model developers, as they can now “plug into” a vast, pre-existing ecosystem of applications without needing to build a corresponding ecosystem from scratch. It also significantly increases the bargaining power of application developers and enterprise customers, who are empowered to “mix and match,” choosing the best and most cost-effective model for each specific task rather than being confined to the offerings of their chosen cloud provider. Ultimately, interoperability fosters a more dynamic, innovative, and resilient market by ensuring that competition occurs on the merits at each layer of the stack, rather than being predetermined by control of a chokepoint at a lower layer.
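With a common interface in place, "mix and match" reduces to a routing decision. The Python sketch below picks, for each task, the cheapest model whose quality score meets that task's threshold. Model names, prices, the single quality score per model, and the task thresholds are all hypothetical placeholders, not benchmarks of real systems.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ModelOffer:
    name: str
    price_per_1k_tokens: float  # hypothetical price
    quality: float              # hypothetical 0-1 score, assumed to apply to every task

def cheapest_adequate(offers: list[ModelOffer], min_quality: float) -> Optional[ModelOffer]:
    """Lowest-priced offer that meets the quality bar, or None if none does."""
    adequate = [o for o in offers if o.quality >= min_quality]
    return min(adequate, key=lambda o: o.price_per_1k_tokens, default=None)

offers = [
    ModelOffer("cloud_a_large", 0.030, 0.92),
    ModelOffer("cloud_b_medium", 0.010, 0.85),
    ModelOffer("self_hosted_open", 0.004, 0.78),
]
task_thresholds = {"contract_review": 0.90, "customer_chat": 0.84, "email_triage": 0.75}

plan = {}
for task, bar in task_thresholds.items():
    choice = cheapest_adequate(offers, bar)
    plan[task] = choice.name if choice else None
print(plan)
# {'contract_review': 'cloud_a_large', 'customer_chat': 'cloud_b_medium', 'email_triage': 'self_hosted_open'}
```

Under these assumptions one customer ends up buying from three different sources, which is precisely the kind of per-task price and quality competition that lock-in otherwise suppresses.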

Table 3: A Proposed Multi-Layer Interoperability Framework

| AI Stack Layer | Problem | Proposed Mandate | Desired Outcome |
|---|---|---|---|
| Cloud Infrastructure | Vendor Lock-in, High Switching Costs, Discriminatory Access to Hardware.1 | Data & Workload Portability: Mandate open standards and eliminate punitive egress fees.61 FRAND Access: Require fair, reasonable, and non-discriminatory access to critical compute resources.1 | Increased competition among cloud providers, lower costs for customers, and fair access to essential hardware for all AI developers. |
| Foundation Models | Model Ecosystem Lock-in via Proprietary APIs and Formats.63 | Standardized Model APIs: Develop and mandate open, universal standards for interacting with foundation models and AI agents (e.g., based on MCP/ACP).63 | Enables developers to easily switch between or combine models from different providers (“mix-and-match”), fostering greater innovation and price competition among model developers. |
| Applications | Self-Preferencing and Unfair Competition by Vertically Integrated Platforms.1 | Strict Anti-Self-Preferencing Rules: Prohibit platform owners from giving their own applications preferential access to model capabilities, data, or integrations not available to third parties.1 | Ensures a level playing field for all application developers, allowing them to compete on the merits of their products rather than on privileged access to the underlying platform. |

Section 7: Conclusion: The New Trustbusters’ Playbook

The evidence and analysis presented in this report converge on a clear and urgent conclusion: the generative AI market is on a trajectory toward dangerous levels of concentration. This is not a distant or speculative threat but an active process of consolidation, driven by the inherent structure of the AI stack and accelerated by the strategic actions of a few incumbent firms. The formidable barriers to entry in capital and compute create a natural tendency toward monopoly, a tendency that is being exploited through a series of “killer collaborations” that function as de facto vertical mergers, tying the most innovative AI startups to the dominant cloud platforms. The historical precedents of the IBM and Microsoft antitrust cases provide a crucial lesson: in structurally concentrated technology markets, the most effective and durable remedies are those that pry open technical chokepoints and mandate interoperability, thereby reintroducing the potential for competition.

This analysis leads to a clear, dual-pronged policy prescription for the new trustbusters of the AI era. First, there must be intense and preemptive scrutiny of the partnerships and investments between dominant technology platforms and leading AI developers. These collaborations must be analyzed not as simple financial transactions but for their structural impact on the market, with a strong presumption against deals that deepen dependencies and foreclose competition. This addresses the flow of consolidation. Second, and more fundamentally, regulators must implement a proactive mandate for multi-layer interoperability. This addresses the stock of existing and accumulating market power by dismantling the technical and commercial walls that create lock-in. These two strategies are complementary and mutually reinforcing; scrutinizing partnerships slows the construction of new walled gardens, while mandating interoperability begins the process of tearing down the walls of existing ones.

The stakes of this policy debate extend far beyond questions of economic efficiency or consumer prices. The concentration of control over a foundational technology like artificial intelligence is a matter of profound social and political significance. An AI economy dominated by a handful of unaccountable gatekeepers would not only stifle the pace of innovation and lead to higher costs, but it would also concentrate an unprecedented degree of power to shape public discourse, influence economic outcomes, and automate societal functions.1 As AI systems become increasingly integrated into the core of our economic and social lives, ensuring that this power remains decentralized and subject to competitive pressures is a democratic imperative.15 A failure to act risks allowing the generative AI revolution to become an engine for greater inequality, entrenching the power of a few firms at the expense of broad-based prosperity.

Therefore, the task for the new trustbusters is not merely to police the market for illegal conduct but to actively shape its architecture for competition. It requires a shift in mindset from a reactive, legalistic approach to a proactive, engineering-oriented one. The goal is to ensure that the foundational technological infrastructure of the 21st century is built on principles of openness, fairness, and interoperability. By embracing this new playbook, policymakers can ensure that the transformative potential of artificial intelligence is harnessed not to reinforce monopoly, but to unleash a new era of permissionless innovation and widely shared growth.

Works cited

- Understanding market concentration in the AI supply chain …, accessed September 7, 2025, https://equitablegrowth.org/understanding-market-concentration-in-the-ai-supply-chain/

- Reducing the Barriers to Entry for Foundation Model Training – arXiv, accessed September 7, 2025, https://arxiv.org/html/2404.08811v1

- Chart: The Big Three Stay Ahead in Ever-Growing Cloud Market …, accessed September 7, 2025, https://www.statista.com/chart/18819/worldwide-market-share-of-leading-cloud-infrastructure-service-providers/

- Market concentration implications of foundation models: The Invisible Hand of ChatGPT, accessed September 7, 2025, https://www.brookings.edu/articles/market-concentration-implications-of-foundation-models-the-invisible-hand-of-chatgpt/

- The UK CMA’s review of AI Foundation Models | Global law firm – Norton Rose Fulbright, accessed September 7, 2025, https://www.nortonrosefulbright.com/en/knowledge/publications/8ca8f277/the-uk-cmas-ai-foundation-models-initial-report

- 3 ways AI is changing how people shop, marketers work and stacks evolve | MarTech, accessed September 7, 2025, https://martech.org/3-ways-ai-is-changing-how-people-shop-marketers-work-and-stacks-evolve/

- The AI Chip Market Explosion: Key Stats on Nvidia, AMD, and Intel’s AI Dominance, accessed September 7, 2025, https://patentpc.com/blog/the-ai-chip-market-explosion-key-stats-on-nvidia-amd-and-intels-ai-dominance

- NVIDIA Discrete GPU Market Share Dominance Expands to 94%, Notes Report, accessed September 7, 2025, https://www.techpowerup.com/340614/nvidia-discrete-gpu-market-share-dominance-expands-to-94-notes-report

- The AI Chip Market Explosion: Key Stats on Nvidia, AMD, and Intel’s …, accessed September 7, 2025, https://patentpc.com/blog/the-ai-chip-market-explosion-key-stats-on-nvidia-amd-and-intels-ai-dominance/

- CMA concludes UK cloud market investigation – Data Centre Review, accessed September 7, 2025, https://datacentrereview.com/2025/08/aws-microsoft-to-face-competition-probe-as-cma-concludes-uk-cloud-market-investigation/

- The New AI Infrastructure Stack. An Investment Analysis of the Next Wave… – Devansh, accessed September 7, 2025, https://machine-learning-made-simple.medium.com/the-new-ai-infrastructure-stack-a6e211ab950f

- What is the cost of training large language models? – CUDO Compute, accessed September 7, 2025, https://www.cudocompute.com/blog/what-is-the-cost-of-training-large-language-models

- What is the cost of training large language models? – CUDO Compute, accessed September 7, 2025, https://www.cudocompute.com/blog/what-is-the-cost-of-training-large-language-models/

- Big misconceptions of training costs for Deepseek and OpenAI : r/singularity – Reddit, accessed September 7, 2025, https://www.reddit.com/r/singularity/comments/1id60qi/big_misconceptions_of_training_costs_for_deepseek/

- AI monopolies – Economic Policy, accessed September 7, 2025, https://www.economic-policy.org/79th-economic-policy-panel/ai-monopolies/

- GPU Cluster Pricing: How Much Does Building One Really Cost? – Cyfuture Cloud, accessed September 7, 2025, https://cyfuture.cloud/kb/gpu/gpu-cluster-pricing-how-much-does-building-one-really-cost

- Rent GPUs | Vast.ai, accessed September 7, 2025, https://vast.ai/

- The importance of proprietary data sets in the context of AI. – Entopy, accessed September 7, 2025, http://www.entopy.com/the-importance-of-proprietary-data-sets-in-the-context-of-ai/

- Generative AI Licensing Agreement Tracker – Ithaka S+R, accessed September 7, 2025, https://sr.ithaka.org/our-work/generative-ai-licensing-agreement-tracker/

- Licensing research content via agreements that authorize uses of artificial intelligence, accessed September 7, 2025, https://www.authorsalliance.org/2024/01/10/licensing-research-content-via-agreements-that-authorize-uses-of-artificial-intelligence/

- Friendly reminder that the “license to train AI models” argument won’t prevent the development of this tech (as some folks hope), but rather it will only help the powerful who own enough data to train AI foundation models. The tech will still be developed and artists still won’t be compensated : r/ – Reddit, accessed September 7, 2025, https://www.reddit.com/r/aiwars/comments/1fx9xvl/friendly_reminder_that_the_license_to_train_ai/

- Is Data Really a Barrier to Entry? Rethinking Competition Regulation in Generative AI, accessed September 7, 2025, https://www.mercatus.org/research/working-papers/data-really-barrier-entry-rethinking-competition-regulation-generative-ai

- Microsoft’s AI Push Beyond OpenAI Could Drive Next Breakout – Nasdaq, accessed September 7, 2025, https://www.nasdaq.com/articles/microsofts-ai-push-beyond-openai-could-drive-next-breakout

- Microsoft’s financial disclosures show how OpenAI is fueling growth — and taking a toll on profits – GeekWire, accessed September 7, 2025, https://www.geekwire.com/2024/microsofts-financial-disclosures-show-how-openai-is-fueling-growth-and-taking-a-toll-on-profits/

- Microsoft: AI Push Beyond OpenAI Could Drive Next Breakout …, accessed September 7, 2025, https://www.investing.com/analysis/microsoft-ai-push-beyond-openai-could-drive-next-breakout-200666461

- How Much Is Microsoft’s Investment in OpenAI Worth? By: Jon Tang, Managing director – Empire Valuation Consultants, accessed September 7, 2025, https://empireval.com/how-much-is-microsofts-investment-in-openai-worth-by-jon-tang-managing-director/

- Wall Street analysts claim Microsoft’s $13 billion OpenAI investment is “some of the best money ever spent” despite bankruptcy reports — Here’s why – Windows Central, accessed September 7, 2025, https://www.windowscentral.com/microsoft/analysts-claim-microsofts-openai-investment-is-best-money-spent

- Microsoft’s Strategic Investment in OpenAI | FDTec, accessed September 7, 2025, https://fdtec.co/microsofts-strategic-investment-in-openai/

- Anthropic – Wikipedia, accessed September 7, 2025, https://en.wikipedia.org/wiki/Anthropic

- UK regulator looks at Google’s partnership with Anthropic | Google …, accessed September 7, 2025, https://www.theguardian.com/technology/article/2024/jul/30/google-anthropic-partnership-cma-ai

- Google Deepens Anthropic Partnership With New $1 Billion Investment – PYMNTS.com, accessed September 7, 2025, https://www.pymnts.com/artificial-intelligence-2/2025/google-deepens-anthropic-partnership-with-new-1-billion-investment/

- Anthropic Partners with Google Cloud, accessed September 7, 2025, https://www.anthropic.com/news/anthropic-partners-with-google-cloud

- Anthropic Forges Partnership With Google Cloud to Help Deliver Reliable and Responsible AI – Feb 3, 2023, accessed September 7, 2025, https://www.googlecloudpresscorner.com/2023-02-03-Anthropic-Forges-Partnership-With-Google-Cloud-to-Help-Deliver-Reliable-and-Responsible-AI

- Try Anthropic’s Claude models on Google Cloud’s Vertex AI, accessed September 7, 2025, https://cloud.google.com/products/model-garden/claude

- AI Investment: Amazon’s Investment in Start-up Anthropic is a Great Sign of Good AI Investment – CTO Magazine, accessed September 7, 2025, https://ctomagazine.com/ai-investment-amazon-partnership-with-anthropic-startup-ai/

- Amazon doubles down on AI startup Anthropic with $4bn investment – The Guardian, accessed September 7, 2025, https://www.theguardian.com/technology/2024/nov/22/amazon-anthropic-ai-investment

- Amazon completes $4B Anthropic investment to advance generative …, accessed September 7, 2025, https://www.aboutamazon.com/news/company-news/amazon-anthropic-ai-investment

- AI trends for 2025: Competition and antitrust – Dentons, accessed September 7, 2025, https://www.dentons.com/en/insights/articles/2025/january/10/ai-trends-for-2025-competition-and-antitrust

- AI revolution: what to expect from the UK’s competition … – Dentons, accessed September 7, 2025, https://www.dentons.com/ru/insights/articles/2025/january/16/ai-revolution-what-to-expect-from-the-uks-competition-watchdog

- AI and Antitrust 2025: DOJ, FTC Scrutiny on Pricing & Algorithms, accessed September 7, 2025, https://natlawreview.com/article/ai-antitrust-landscape-2025-federal-policy-algorithm-cases-and-regulatory-scrutiny

- Joint Statement on Enforcement Efforts Against …, accessed September 7, 2025, https://www.ftc.gov/system/files/ftc_gov/pdf/EEOC-CRT-FTC-CFPB-AI-Joint-Statement%28final%29.pdf

- Antitrust and AI: US Antitrust Regulators Increasingly Focused on the …, accessed September 7, 2025, https://www.klgates.com/Antitrust-and-AI-US-Antitrust-Regulators-Increasingly-Focused-on-the-Potential-Anticompetitive-Effects-of-AI-9-20-2023

- Antitrust in the AI Era: Strengthening Enforcement Against Emerging …, accessed September 7, 2025, https://fas.org/publication/antitrust-in-the-ai-era/

- AI Act | Shaping Europe’s digital future – European Union, accessed September 7, 2025, https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

- Microsoft and EU to Settle Antitrust Case Involving Teams – PYMNTS.com, accessed September 7, 2025, https://www.pymnts.com/antitrust/2025/microsoft-and-eu-to-settle-antitrust-case-involving-teams/

- European Commission consults on a Cloud and AI Development Act …, accessed September 7, 2025, https://www.regulationtomorrow.com/france/fintech-fr/european-commission-consults-on-a-cloud-and-ai-development-act/

- Artificial intelligence and antitrust law as a key issue for companies …, accessed September 7, 2025, https://www.noerr.com/en/insights/artificial-intelligence-and-antitrust-law-as-a-key-issue-for-companies-in-2025

- The Ghosts of Antitrust Past: Part 2 (IBM) – Truth on the Market, accessed September 7, 2025, https://truthonthemarket.com/2020/02/03/the-ghosts-of-antitrust-past-part-2-ibm/

- United States v. Microsoft Corp. – Wikipedia, accessed September 7, 2025, https://en.wikipedia.org/wiki/United_States_v._Microsoft_Corp.

- IBM and Microsoft: Antitrust then and now – CNET, accessed September 7, 2025, https://www.cnet.com/tech/tech-industry/ibm-and-microsoft-antitrust-then-and-now/

- Taking a Second Look at the Idea That Antitrust Action Created the US Software Industry, accessed September 7, 2025, https://www.aei.org/economics/taking-a-second-look-at-the-idea-that-antitrust-action-created-the-u-s-software-industry/

- The Microsoft Antitrust Case – ResearchGate, accessed September 7, 2025, https://www.researchgate.net/publication/230636364_The_Microsoft_Antitrust_Case

- Lessons from the Microsoft Antitrust Cases – The World Financial Review, accessed September 7, 2025, https://worldfinancialreview.com/lessons-from-the-microsoft-antitrust-cases/

- “Antitrust Interoperability Remedies” by Herbert J. Hovenkamp – Penn Carey Law: Legal Scholarship Repository, accessed September 7, 2025, https://scholarship.law.upenn.edu/faculty_scholarship/2814/

- Interoperability as a Competition Remedy for Digital Networks, accessed September 7, 2025, https://www.researchgate.net/publication/350315645_INTEROPERABILITY_AS_A_COMPETITION_REMEDY_FOR_DIGITAL_NETWORKS

- Interoperability in Digital Markets – CERRE, accessed September 7, 2025, https://cerre.eu/wp-content/uploads/2022/03/220321_CERRE_Report_Interoperability-in-Digital-Markets_FINAL.pdf

- A Brief History of the Internet & Related Networks, accessed September 7, 2025, https://www.internetsociety.org/internet/history-internet/brief-history-internet-related-networks/

- TCP/IP: What It Is & How It Works – Splunk, accessed September 7, 2025, https://www.splunk.com/en_us/blog/learn/tcp-ip.html

- TCP/IP Model – GeeksforGeeks, accessed September 7, 2025, https://www.geeksforgeeks.org/computer-networks/tcp-ip-model/

- Open protocol development (article) | Khan Academy, accessed September 7, 2025, https://www.khanacademy.org/computing/computers-and-internet/xcae6f4a7ff015e7d:the-internet/xcae6f4a7ff015e7d:developing-open-protocols/a/open-standards-and-protocols

- What Is Data Portability (Data Porting)? – AWS, accessed September 7, 2025, https://aws.amazon.com/what-is/data-porting/

- Data Portability – Wasabi, accessed September 7, 2025, https://wasabi.com/glossary/data-portability

- What is AI interoperability and why does it matter in the age of LLMs, accessed September 7, 2025, https://portkey.ai/blog/what-is-ai-interoperability

- When AI Systems Talk: The Power of Interoperability – Sandgarden, accessed September 7, 2025, https://www.sandgarden.com/learn/interoperability

- AI and its effects on competition – Swiss Economics, accessed September 7, 2025, https://swiss-economics.ch/blog-en/items/ai-and-its-effects-on-competition.html

- Harms of AI – National Bureau of Economic Research, accessed September 7, 2025, https://www.nber.org/system/files/working_papers/w29247/w29247.pdf

- Harms of AI | MIT Economics, accessed September 7, 2025, https://economics.mit.edu/sites/default/files/publications/Harms%20of%20AI.pdf
