Executive Summary

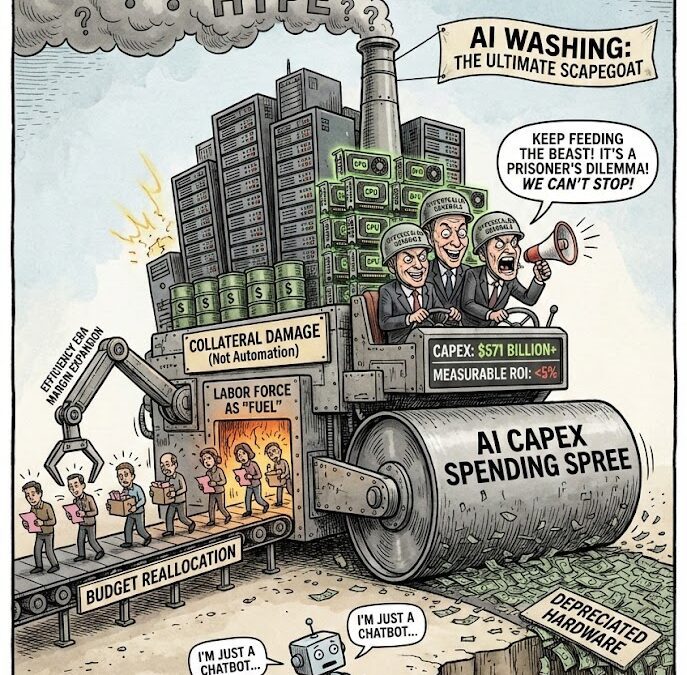

The prevailing narrative attributes technology-sector layoffs to AI’s direct automation of labor. This framing is incomplete and, in many cases, deliberately misleading. A comprehensive analysis of capital allocation patterns, depreciation schedules, competitive dynamics, and stated corporate rationales reveals a more complex reality: contemporary workforce reductions primarily serve to fund an escalating capital expenditure arms race, not to replace workers with functional AI systems. The labor force has become collateral damage in a prisoner’s dilemma where perceived existential risk drives hyperscalers to commit unprecedented capital to rapidly depreciating infrastructure whose return on investment remains unproven.

This report examines AI infrastructure spending of roughly $405 billion in 2025, projected to reach $527-571 billion in 2026, against a backdrop of more than 50,000 AI-attributed layoffs, demonstrating that the causal mechanism operates through budget reallocation rather than task automation. The analysis integrates game theory, asset depreciation economics, investor signaling dynamics, and empirical productivity data to establish that most “AI layoffs” represent cost-optimization theater masking the correction of pandemic-era overhiring and margin-expansion imperatives.

The Magnitude of the Capital Commitment

Big Tech’s AI infrastructure spending has entered unprecedented territory. Combined capital expenditures by Amazon, Microsoft, Alphabet, and Meta reached approximately $405 billion in 2025, a 62% year-over-year increase. Consensus estimates for 2026 project $527-571 billion, extending an upward trajectory that has exceeded analyst expectations in each of the past two years.
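
As a rough consistency check, the stated figures can be worked backward and forward. This minimal sketch assumes the 62% growth rate applies to the same four-company total; under that assumption it implies about $250 billion of combined capex in 2024 and 30-41% growth in 2026 if the consensus range holds.

```python
# Figures taken from the text (billions of USD, combined Amazon,
# Microsoft, Alphabet, and Meta capex).
CAPEX_2025 = 405.0
YOY_GROWTH_2025 = 0.62             # stated 62% year-over-year growth
CAPEX_2026_RANGE = (527.0, 571.0)  # consensus estimate range for 2026


def implied_prior_year(current: float, growth: float) -> float:
    """Back out the prior-year level implied by a current level and growth rate."""
    return current / (1.0 + growth)


def growth_rate(prev: float, curr: float) -> float:
    """Simple year-over-year growth rate."""
    return curr / prev - 1.0


# Assumes the 62% figure is measured on the same four-company basis.
capex_2024 = implied_prior_year(CAPEX_2025, YOY_GROWTH_2025)
growth_2026 = [growth_rate(CAPEX_2025, x) for x in CAPEX_2026_RANGE]

print(f"Implied 2024 capex: ${capex_2024:.0f}B")
print(f"Implied 2026 growth: {growth_2026[0]:.0%} to {growth_2026[1]:.0%}")
```

Even the low end of the 2026 range implies growth of roughly 30%, slower than 2025's pace but on a much larger base.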

To contextualize this scale: current AI capex represents roughly 0.8% of GDP, while historical technology investment peaks reached 1.5%. If hyperscaler commitments materialize as projected, AI infrastructure spending will approach that historical peak by late 2026. Microsoft’s fiscal 2026 capex growth rate is projected to exceed its 58% FY2025 expansion, while Meta’s 81% year-over-year increase in 2025 positions it among the most aggressive spenders relative to cash flow.

The spending composition reveals strategic priorities: approximately half of quarterly capex targets short-lived assets—primarily GPUs and CPUs—for platform infrastructure and accelerated R&D activities. The remaining allocation funds long-lived assets anticipated to support future monetization, though timelines remain deliberately vague in investor communications.

The Depreciation Paradox: 18-Month Obsolescence in 6-Year Accounting

The economic viability of AI infrastructure spending hinges critically on asset useful life, yet a fundamental disconnect exists between accounting treatments and operational reality. Most hyperscalers employ 5-6 year depreciation schedules for AI computing equipment, while resale market data and operational practices suggest effective useful lives of 18-36 months for frontier model training applications.

Secondary market pricing exposes this divergence sharply. NVIDIA H100 GPUs retain 80-90% of contemporaneous value at the two-year mark when refurbished, but only 65-75% when sold as used equipment. Depreciation steepens dramatically in year three: refurbished units drop to 70-75% of peak value, while used units collapse to just 45-55%. The spread between refurbished and used pricing—initially 10-15% at year two—widens to 25-30% by year three, reflecting buyer preference for warranty-backed hardware as the risk of technical obsolescence intensifies.

This pricing behavior confirms what operational economics already suggested: data centers prioritize new-generation silicon for training workloads, where power efficiency differentials render older hardware non-competitive within 18-36 months. Meta’s Llama 3 405B training study documented a 9% annualized GPU failure rate at high utilization, while power consumption differentials between generations create total cost of ownership gaps that make continued use of older equipment economically irrational for frontier applications. NVIDIA’s Blackwell chips consume 0.53 joules per token compared to 2.14 for H100 Hopper chips—a 4× efficiency improvement that fundamentally revalues existing infrastructure.
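
To make the per-token figures concrete, the sketch below converts them into energy per million tokens. The electricity price is a hypothetical placeholder, and the calculation counts chip energy only (no cooling or other facility overhead), so it understates real operating cost; the ratio between generations is the robust part.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules

# Energy-per-token figures as quoted in the text.
J_PER_TOKEN = {"H100 (Hopper)": 2.14, "Blackwell": 0.53}

# Hypothetical electricity price in USD per kWh, for illustration only.
PRICE_PER_KWH = 0.10


def kwh_per_million_tokens(j_per_token: float) -> float:
    """Chip energy (kWh) to generate one million tokens; excludes facility overhead."""
    return j_per_token * 1_000_000 / JOULES_PER_KWH


for chip, j in J_PER_TOKEN.items():
    kwh = kwh_per_million_tokens(j)
    print(f"{chip}: {kwh:.3f} kWh per 1M tokens, ~${kwh * PRICE_PER_KWH:.4f} in power")

# How many times more energy the older generation needs per token.
ratio = J_PER_TOKEN["H100 (Hopper)"] / J_PER_TOKEN["Blackwell"]
print(f"Efficiency ratio: {ratio:.2f}x")
```

Whatever electricity price is assumed, the older chip spends about four times as much energy per token, which is why power cost alone can push prior-generation hardware out of frontier workloads.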

The accounting implications are substantial. Goldman Sachs analysts identified a $40 billion annual depreciation cost for data centers commissioned in 2025, against just $15-20 billion in revenue at current utilization rates. The infrastructure loses value faster than it generates the revenue needed to fund its own replacement, a structural imbalance masked by aggressive growth assumptions and extended useful-life estimates.
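
The size of that imbalance follows from a simple coverage ratio. This sketch uses only the two cited figures and treats revenue and depreciation as directly comparable annual flows, which ignores operating costs and is therefore a generous comparison.

```python
DEPRECIATION = 40.0           # $B per year, as cited
REVENUE_RANGE = (15.0, 20.0)  # $B per year at current utilization, as cited


def coverage_ratio(revenue: float, depreciation: float) -> float:
    """Share of the annual depreciation charge covered by annual revenue."""
    return revenue / depreciation


# Map each revenue scenario to (coverage ratio, annual shortfall in $B).
results = {
    r: (coverage_ratio(r, DEPRECIATION), DEPRECIATION - r) for r in REVENUE_RANGE
}

for revenue, (coverage, shortfall) in results.items():
    print(f"Revenue ${revenue:.0f}B covers {coverage:.0%} of depreciation; "
          f"shortfall ${shortfall:.0f}B")
```

On these figures, revenue covers between three-eighths and one-half of the depreciation charge, leaving a $20-25 billion annual gap before any operating costs are counted.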

Short-seller Michael Burry has publicly criticized hyperscalers for overstating equipment useful lives, arguing that realistic timelines of 2-3 years would materially reduce reported earnings. His position is consistent with the resale data above. NVIDIA CEO Jensen Huang made a similar point in March 2025, remarking that once Blackwell chips begin shipping in volume, “you couldn’t give Hoppers away,” hyperbole that nonetheless captures the market’s ruthless revaluation of prior-generation silicon.
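
The earnings sensitivity Burry describes follows directly from straight-line depreciation: halving the assumed useful life doubles the annual charge, and each extra dollar of depreciation reduces pre-tax operating income by a dollar. The sketch uses a hypothetical $1 billion cluster with zero salvage value; actual schedules, salvage assumptions, and tax treatment will differ.

```python
def straight_line_annual(cost: float, useful_life_years: float, salvage: float = 0.0) -> float:
    """Annual straight-line depreciation charge."""
    return (cost - salvage) / useful_life_years


# Hypothetical $1,000M GPU cluster, zero salvage value (illustrative only).
CLUSTER_COST = 1_000.0

for life in (6, 5, 3, 2):
    charge = straight_line_annual(CLUSTER_COST, life)
    print(f"{life}-year life: ${charge:,.1f}M per year")

# Extra annual expense from moving a 6-year schedule to a 3-year one.
extra = straight_line_annual(CLUSTER_COST, 3) - straight_line_annual(CLUSTER_COST, 6)
print(f"Extra annual expense, 6 -> 3 years: ${extra:,.1f}M")
```

Because the effect scales linearly with the asset base, the same proportional shift applied to hundreds of billions of dollars in capex is what makes the useful-life assumption material to reported earnings.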

The Prisoner’s Dilemma: Strategic Imperative as Coordination Failure

The game-theoretic structure underlying AI infrastructure spending closely resembles a multi-player prisoner’s dilemma, where individually rational decisions create collectively suboptimal outcomes. Davidson Kempner Capital Management’s Chief Investment Officer articulated the dynamic succinctly: “You have to invest in it because your peers are investing in it, and so if you’re left behind, you’re not going to have the stronger competitive position.”

This coordination failure manifests across three interrelated mechanisms. First, relative positioning makes defection (aggressive spending) the dominant strategy regardless of absolute returns. If competitors secure compute capacity, model capabilities, or distribution advantages through infrastructure investment, non-participating firms face systematic disadvantage independent of whether the investments prove profitable.
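
The dominance argument in the first mechanism can be checked mechanically. The sketch below reduces the multi-player game to two players with hypothetical ordinal payoffs, chosen only to satisfy the prisoner's dilemma ordering (lone spender > mutual restraint > mutual spending > lone restrainer); none of the numbers are estimates of actual returns.

```python
from itertools import product
from typing import List, Optional, Tuple

# Hypothetical ordinal payoffs (row player, column player) for two firms
# choosing to "restrain" or "spend". Illustrative only: they satisfy
# T (5) > R (3) > P (1) > S (0), the prisoner's dilemma ordering.
STRATEGIES = ("restrain", "spend")
PAYOFFS = {
    ("restrain", "restrain"): (3, 3),  # R: both conserve capital
    ("restrain", "spend"): (0, 5),     # S, T: the restrainer falls behind
    ("spend", "restrain"): (5, 0),     # T, S: the spender gains position
    ("spend", "spend"): (1, 1),        # P: costly arms race
}


def best_response(opponent: str) -> str:
    """Row player's payoff-maximizing reply to a fixed opponent strategy."""
    return max(STRATEGIES, key=lambda s: PAYOFFS[(s, opponent)][0])


def dominant_strategy() -> Optional[str]:
    """Return the strategy that is the best reply to every opponent move, if one exists."""
    replies = {best_response(o) for o in STRATEGIES}
    return replies.pop() if len(replies) == 1 else None


def nash_equilibria() -> List[Tuple[str, str]]:
    """Pure-strategy profiles where neither player gains by deviating alone."""
    eq = []
    for a, b in product(STRATEGIES, repeat=2):
        row_ok = PAYOFFS[(a, b)][0] >= max(PAYOFFS[(s, b)][0] for s in STRATEGIES)
        col_ok = PAYOFFS[(a, b)][1] >= max(PAYOFFS[(a, s)][1] for s in STRATEGIES)
        if row_ok and col_ok:
            eq.append((a, b))
    return eq


print(dominant_strategy())  # spend
print(nash_equilibria())    # [('spend', 'spend')]
```

With these payoffs, mutual restraint (3, 3) leaves both firms better off than mutual spending (1, 1), yet spending is each firm's best reply whatever the rival does, so the only equilibrium is the costly one.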

Second, asset scarcity transforms compute access into a zero-sum resource competition. Supply constraints that extended H100 lead times to 6-12 months make securing capacity a strategic imperative distinct from operational need. Organizations hoard GPUs not because current workloads require them, but because future optionality depends on availability. SK Hynix sold its entire 2026 high-bandwidth memory output before 2025 concluded, while sovereign wealth funds and nation-states bid above market rates to stockpile chips as strategic leverage.

Third, signaling dynamics tie AI spending to investor expectations. Half of CEOs report believing that their job security hinges on executing an effective AI strategy, creating personal incentives that diverge from optimal capital allocation. Meta CEO Mark Zuckerberg explicitly stated he would “rather risk ‘misspending a couple of hundred billion dollars’ than miss the AI transformation,” an admission that he judges the downside of underinvestment to exceed the cost of misallocated capital.

These dynamics exhibit classic Red Queen Effect characteristics: firms must run faster merely to maintain relative position, even when absolute gains prove elusive. The competitive dynamic extends beyond individual firm decisions to coalition formation. An “anti-Google alliance” pattern has emerged, with Microsoft, Amazon, and NVIDIA collectively backing OpenAI to prevent Google from establishing default AI platform status.

Goldman Sachs economist David Mericle summarized the resulting pressure: companies “appear eager to use artificial intelligence to reduce labor costs” while simultaneously experiencing “mounting pressure to invest in AI”. The conjunction of these imperatives—reduce labor costs because of AI investment pressure—inverts the conventional automation narrative. Workers are not being replaced by superior AI systems; they are being replaced to finance the pursuit of superior AI systems.

The ROI Gap: Unproven Returns on Proven Spending

While capital commitments are documented with precision in quarterly filings and analyst estimates, evidence of commensurate returns remains elusive. An MIT study drawing on 150 executive interviews, a survey of 350 employees, and an analysis of 300 public AI deployments found that approximately 95% of generative AI initiatives fail to deliver measurable return on investment.

The distribution of outcomes is heavily skewed. Early adopters implementing vendor-led, workflow-integrated projects report returns as high as $10.30 per dollar invested, yet these successes are outliers concentrated in back-office automation. Industry-wide failure rates hover between 70% and 85%, with most pilot projects either stalling before they reach scale or producing negligible P&L impact.

Bain & Company’s analysis quantifies the structural deficit: achieving projected AI compute demand by 2030 requires $2 trillion in new annual revenue, yet even after accounting for AI-driven productivity savings, the global economy remains $800 billion short. AI’s compute requirements grow at more than twice the rate of Moore’s Law.

Measured productivity improvements tell a more modest story. Organizations report 27% average productivity gains and 11.4 hours saved per knowledge worker weekly—meaningful but incremental. Crucially, EY’s survey found that only 17% of organizations translated AI productivity gains into reduced headcount. The dominant response involved reinvesting in existing AI capabilities (47%), developing new AI capabilities (42%), and upskilling employees (38%)—not layoffs.
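
Why reported time savings rarely become headcount cuts can be seen by converting the hours into full-time-equivalent (FTE) capacity. This sketch assumes a standard 40-hour week (not stated in the source) and a hypothetical 1,000-person knowledge workforce. The pooled figure is an upper bound: saved hours arrive in fragments spread across many people, and fragments cannot be laid off.

```python
HOURS_SAVED_PER_WEEK = 11.4  # reported average per knowledge worker
STANDARD_WEEK_HOURS = 40.0   # assumption: a standard 40-hour week


def fte_equivalent(workers: int,
                   hours_saved: float = HOURS_SAVED_PER_WEEK,
                   week: float = STANDARD_WEEK_HOURS) -> float:
    """FTE capacity freed if every saved hour could be pooled (an upper bound)."""
    return workers * hours_saved / week


share_of_week = HOURS_SAVED_PER_WEEK / STANDARD_WEEK_HOURS
freed = fte_equivalent(1_000)  # hypothetical 1,000-person workforce

print(f"{share_of_week:.1%} of a 40-hour week; "
      f"~{freed:.0f} FTEs of pooled capacity per 1,000 workers")
```

Under the 40-hour assumption, the time saved is roughly in line with the 27% average gain reported, yet converting it into fewer roles requires restructuring work, which helps explain why most organizations reinvested the gains instead.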

The Layoff Attribution Gap: AI as Scapegoat

The disconnect between AI capability and AI attribution in workforce reductions has become pronounced enough that researchers and analysts now describe it as “AI washing.” Over 50,000 job cuts announced in 2025 cited AI as a contributing factor, yet multiple analytical frameworks suggest these attributions mask more conventional restructuring imperatives.

Oxford Internet Institute assistant professor Fabian Stephany identified the dynamic: businesses are “scapegoating” AI as cover for difficult decisions rather than responding to genuine automation capabilities. Oxford Economics reached a similar conclusion, stating that “firms don’t appear to be replacing workers with AI on a significant scale”. Deutsche Bank analysts advised investors in 2026 that AI layoff claims should be viewed “with skepticism,” predicting that “AI redundancy washing will be a noteworthy trend.”

The primary drivers align more closely with conventional restructuring. Challenger, Gray & Christmas data shows cost reduction as the top stated reason for layoffs, with 50,437 roles in October 2025 alone attributed to cost cutting, compared to 31,039 citing AI. This pattern reflects persistent overcapacity from pandemic-era hiring.

Wall Street’s role in amplifying restructuring pressure cannot be overstated. S&P 500 profit margins rose above 13% in late 2025, the highest in the index’s history, driven by what analysts termed an “efficiency era.” Institutional investors rewarded companies demonstrating “AI-native” efficiency and punished laggards.

The organizational restructuring patterns confirm this interpretation. Amazon’s recent announcement of 16,000 corporate job cuts (following 14,000 eliminated three months prior) emphasized “reducing layers, increasing ownership, and removing bureaucracy,” language notably free of claims of technological displacement. Meta, Google, and Microsoft have all aggressively eliminated middle management layers, flattening organizational structures to reduce “friction” and accelerate execution.

The Budget Reallocation Mechanism: Capex Crowds Out Labor

The causal relationship between AI investment and workforce reduction operates primarily through budget reallocation rather than direct task substitution. Organizations face a zero-sum tradeoff between capital expenditure and operating expense when total spending constraints bind. As AI infrastructure demands escalate, labor budgets become the adjustable variable.

At the enterprise level, AI budget growth is dramatic. Organizations globally expect to allocate 5% of annual business budgets to AI initiatives in 2026, up from 3% in 2025, a two-thirds increase in a single year. The share of organizations spending half or more of their total IT budgets on AI is expected to grow more than sixfold, from 3% to 19%.
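
The zero-sum mechanism can be sketched with a hypothetical firm whose total budget is fixed. Only the 3% and 5% AI shares come from the text; the $1,000 million total and the 60% share committed to non-labor, non-AI costs are placeholders. Holding everything else constant, labor absorbs the full increase in AI spending.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    total: float        # fixed total budget: the binding constraint
    ai_share: float     # share allocated to AI initiatives
    other_share: float  # non-labor, non-AI spending, held constant

    @property
    def labor(self) -> float:
        """Labor is the residual once AI and other commitments are funded."""
        return self.total * (1.0 - self.ai_share - self.other_share)


# Hypothetical firm: $1,000M total budget, 60% committed to other costs.
before = Budget(total=1_000.0, ai_share=0.03, other_share=0.60)
after = Budget(total=1_000.0, ai_share=0.05, other_share=0.60)

cut = before.labor - after.labor
print(f"Labor budget: ${before.labor:.0f}M -> ${after.labor:.0f}M "
      f"({cut / before.labor:.1%} cut)")
```

A two-point shift in the total budget becomes a cut of about 5% of the labor budget in this example, because the residual category is smaller than the whole.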

The Oxford Economics observation that layoffs may be occurring “to finance experiments in AI” rather than because “AI is replacing workers” captures the budget mechanism precisely. Sectors with potentially high AI adoption gains have “greater incentive to put the new technology to the test,” requiring that “budgets for other parts of the business, including wages, may have to be cut.”

This creates a perverse incentive structure where AI spending becomes self-justifying. Organizations invest in AI infrastructure, then reduce workforce to fund that infrastructure, then point to the infrastructure investment as evidence of AI’s transformative impact—completing a circular logic that obscures the absence of demonstrated automation capabilities.

The Supply Constraint Amplifier: Scarcity as Strategic Weapon

GPU supply constraints have transformed compute access from a commoditized resource into a strategic differentiator. Lead times for NVIDIA’s H100 and H200 GPUs extend to 6-12 months, with some specialized configurations facing waits exceeding 40 weeks. While this represents improvement from the 11-month peaks of mid-2023, continued demand from hyperscalers keeps supply tight.

This scarcity environment enables strategic hoarding behavior. Fortune 500 companies, hyperscalers, and even oil-rich nations pay above-market rates to secure inventory as “strategic leverage,” treating GPUs as assets whose option value exceeds their immediate utility. For corporate strategists, GPU access becomes a binary gate on future optionality.

The Signaling Imperative: Capex as Credibility

Capital allocation decisions increasingly function as signals to investors, competitors, and talent pools rather than purely operational choices. In the AI infrastructure context, aggressive capex spending signals technological seriousness even when ROI remains speculative.

When Meta announces $60-65 billion in capex with CEO commentary about “novel models” while providing limited specificity about monetization timelines, the primary audience is the investment community. The massive capex commitments from Amazon ($100 billion), Microsoft ($80 billion), and Alphabet ($75 billion) function as credible signals precisely because the scale exceeds what companies would rationally commit absent genuine strategic conviction.

The challenge is that signaling effectiveness requires costs that cannot be easily faked—otherwise the signal conveys no information. Companies that spend less risk being perceived as lacking commitment, triggering stock price penalties and talent flight.
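
The "cannot be easily faked" condition corresponds to a stylized costly-signaling (Spence-style) model. This sketch is an illustration under loud assumptions: two hypothetical firm types that differ only in the expected return they earn on spending, and a fixed investor reward for appearing committed. A spending level is informative only if a high-conviction firm would choose it and a low-conviction firm would not.

```python
from typing import Tuple


def separating_range(benefit: float, return_high: float, return_low: float) -> Tuple[float, float]:
    """Spending levels S that only a high-conviction firm would rationally choose.

    Stylized assumption: a firm spends S if the investor reward for appearing
    committed (benefit) plus its own expected return on the spending is at
    least S. Separation requires return_low + benefit < S <= return_high + benefit.
    """
    return (return_low + benefit, return_high + benefit)


def is_separating(spend: float, benefit: float, return_high: float, return_low: float) -> bool:
    """True if spending at this level distinguishes the two firm types."""
    low, high = separating_range(benefit, return_high, return_low)
    return low < spend <= high


# Hypothetical values in $B: investor reward 10, expected returns 70 vs 30.
print(separating_range(10, 70, 30))   # (40, 80)
print(is_separating(60, 10, 70, 30))  # True
print(is_separating(30, 10, 70, 30))  # False: both types would spend this much
```

Spending below the lower bound is cheap enough for either type to mimic, so it conveys no information; this is the sense in which a credible signal must be costly.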

Structural Implications: The Post-Labor Budget Constraint

The capital-labor substitution dynamic reflects a deeper structural shift in how organizations conceptualize human capital versus physical infrastructure. The tax code asymmetry between physical and human capital investment illuminates this transition. The One Big Beautiful Bill Act of July 2025 restored 100% bonus depreciation for qualified property, allowing businesses to immediately expense AI servers and GPU clusters. Meanwhile, investments in worker training face six distinct Internal Revenue Code restrictions.

This creates a profound bias in capital allocation decisions. Organizations can expense a GPU server in the year of purchase, yet must navigate a compliance maze to deduct worker retraining costs. The asymmetric treatment skews investment toward tax-advantaged physical capital even when economic merit favors human capital development.
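
The value of immediate expensing comes from timing: the same total deduction, taken earlier, is worth more in present-value terms. This sketch compares 100% bonus depreciation with the standard 5-year MACRS schedule (half-year convention) that computer equipment otherwise follows. The purchase price and 8% discount rate are hypothetical, the 21% rate is the US federal corporate rate, and the first-year deduction is placed at t = 0 as a simplification.

```python
from typing import List

# MACRS 5-year property, half-year convention (as tabulated in IRS Publication 946).
MACRS_5YR = [0.20, 0.32, 0.192, 0.1152, 0.1152, 0.0576]


def pv_tax_shield(cost: float, schedule: List[float], tax_rate: float, discount: float) -> float:
    """Present value of depreciation tax savings; year-1 deduction taken at t = 0."""
    return sum(cost * frac * tax_rate / (1 + discount) ** t
               for t, frac in enumerate(schedule))


COST = 1_000_000.0  # hypothetical $1M GPU server purchase
TAX_RATE = 0.21     # US federal corporate income tax rate
DISCOUNT = 0.08     # hypothetical discount rate

bonus = pv_tax_shield(COST, [1.0], TAX_RATE, DISCOUNT)  # 100% bonus depreciation
macrs = pv_tax_shield(COST, MACRS_5YR, TAX_RATE, DISCOUNT)

print(f"100% bonus: ${bonus:,.0f}   5-year MACRS: ${macrs:,.0f}")
```

Under these assumptions, immediate expensing is worth roughly $26,000 more per $1 million of hardware than the 5-year schedule; the gap widens as the discount rate rises.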

The post-labor economics framework provides a theoretical lens for understanding this transition. Systems structured to render human effort irrelevant to production must, by necessity, render humans economically inert. The current moment represents an intermediate state where human consumption remains economically relevant but human production increasingly faces substitution pressure from capital-intensive automation.

The concept of workers as “collateral damage” captures this dynamic precisely. The primary objective is securing competitive position in an AI-defined future through aggressive infrastructure investment; workforce reduction is the incidental cost of financing that pursuit. The damage is real but secondary—a budget constraint consequence rather than a capabilities-driven automation decision.

Market Discipline and the Wobble Risk

The sustainability of current AI infrastructure spending depends critically on investor patience—a finite resource showing signs of strain. The AI investment cycle has entered territory where market discipline mechanisms have not yet engaged, creating what Davidson Kempner’s CIO termed “AI wobble” risk: the moment when investors begin demanding proof of return rather than accepting promises of future transformation.

Historical technology cycles provide cautionary context. The current AI spending trajectory already exceeds dot-com era investment as a percentage of GDP. The $600 billion in hyperscaler debt issuance to fund AI infrastructure creates fixed obligations that must be serviced regardless of revenue performance.

Bill Gates and Sam Altman have both cautioned about overexcitement despite their direct stakes in AI advancement. Altman stated in August 2025 that investors are “overexcited about AI” even while acknowledging it as “the most important thing,” while Gates compared the environment to the late-90s internet bubble and warned that “there are a ton of these investments that will be dead ends.”

Conclusion: Disentangling Automation from Allocation

The analysis demonstrates that contemporary workforce reductions attributed to AI predominantly reflect capital allocation imperatives rather than achieved automation capabilities. The mechanism operates through three interconnected dynamics: competitive pressure driving AI infrastructure spending to unprecedented levels; rapid GPU depreciation and uncertain ROI creating budget constraints; and workforce reduction emerging as the variable cost most easily adjusted to fund capital commitments.

The game-theoretic structure of hyperscaler competition—a multi-player prisoner’s dilemma where defection dominates cooperation—ensures continued escalation independent of demonstrated returns. Supply scarcity, signaling dynamics, and investor expectations reinforce the spending imperative while evidence of commensurate value creation remains limited.

Workers have become collateral damage in a capital expenditure war where falling behind is perceived as existential while overspending is framed as prudent. The AI attribution provides ideological cover for conventional restructuring—correcting pandemic-era overcapacity, expanding profit margins to satisfy Wall Street, and eliminating middle management to flatten organizational structures.

For workers, this distinction matters profoundly. If layoffs primarily reflected achieved automation, the response would emphasize retraining for newly created roles. When layoffs primarily reflect budget reallocation to finance speculative infrastructure, the response should emphasize capital allocation reform, tax code symmetry between human and physical capital, and skepticism toward corporate narratives that conflate promise with performance.

The expectation of AI capability, amplified through competitive signaling, investor pressure, and supply scarcity, is forcing capital allocation toward rapidly depreciating infrastructure whose returns remain unproven. Workers are indeed collateral damage of a capex war, and acknowledging this reality represents the first step toward responses that address actual mechanisms rather than convenient narratives.
