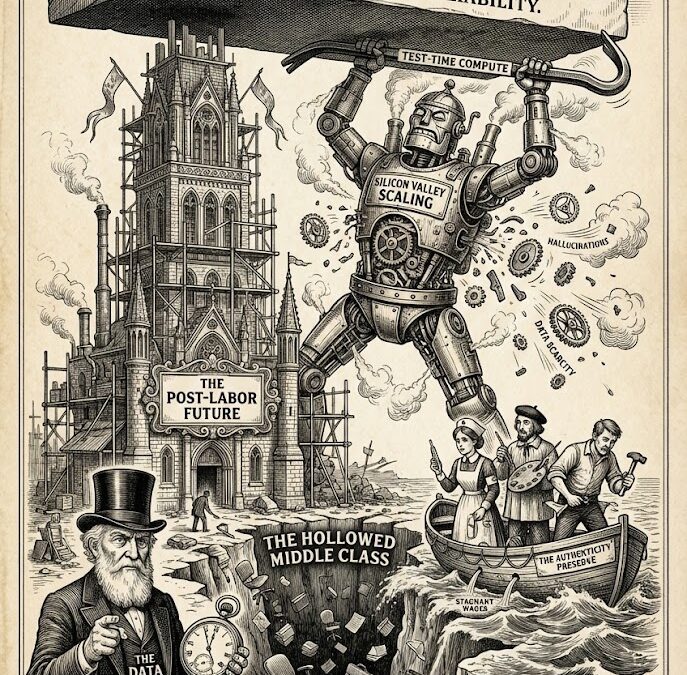

The post-labor thesis can fail in only two fundamental ways.

First, artificial intelligence could encounter durable technical ceilings that permanently preserve large domains of human labor. Second, even if AI capability continues advancing, human preference for human-produced goods and services could sustain employment at scale through authenticity premiums. If either pathway holds strongly enough, the thesis collapses.

This essay examines both possibilities. The conclusion is not that these pathways are irrelevant—but that neither currently offers decisive falsification. Technical ceilings appear real but unstable. Authenticity demand is strong but structurally constrained. Together, they point not to preservation of labor share, but to a reshaping of where—and under what conditions—human work persists.

I. Technical ceilings: real friction, uncertain permanence

Across current frontier research, four capability domains consistently resist full automation. The critical question is not whether these limits exist, but whether they are fundamental or merely transitional.

1. Embodied manipulation and physical cognition

Human dexterity remains unmatched. The human hand has over 20 degrees of freedom and dense tactile sensing; current robotic manipulators remain brittle, energy-intensive, and limited to controlled environments. Soft-object manipulation, sensor fusion, and real-time adaptation in unstructured settings continue to fail outside laboratories.

Yet the barrier here is ambiguous. Hardware constraints (actuator density, power efficiency, sensor resolution) may slow progress, but do not yet constitute proof of impossibility. Commercial humanoids remain constrained to mapped environments, but capital investment and learning curves suggest this ceiling is temporary, not absolute.

2. Common-sense reasoning and world modeling

Large language models still fail at abstract reasoning benchmarks humans solve effortlessly. FrontierMath performance remains low; ARC-AGI tasks expose brittleness; premise-order sensitivity persists. Critics argue these failures reflect architectural limitations rather than data scarcity.

At the same time, the field is actively abandoning pure scaling. World models, joint embedding architectures, and test-time reasoning systems reopen capability curves. Whether these approaches overcome common-sense reasoning deficits remains unresolved, but the research direction itself undermines claims of a permanent ceiling.

3. Emotional labor and social intelligence

Here the case for durable human advantage is strongest. Empathy is not merely recognition—it involves emotional cost, commitment, and relational signaling. AI can simulate affect, but cannot bear emotional burden.

Empirical evidence reinforces this distinction. In care settings, automation reduces human interaction and increases loneliness. Seniors overwhelmingly prefer human caregivers for emotional support. Unlike other ceilings, this one is not reducible to better data or compute.

4. High-stakes reliability and accountability

AI systems remain probabilistic, opaque, and brittle in edge cases. Larger models often hallucinate more convincingly, increasing supervisory risk. Long-horizon autonomous tasks still fail completely in safety evaluations. Certification regimes in aviation, medicine, and infrastructure struggle to reconcile probabilistic systems with accountability requirements.

This ceiling is as much institutional as technical. Even if raw capability improves, liability frameworks may continue anchoring responsibility to humans.

Capability trajectories point to transformation, not stasis

Claims that “scaling has hit a wall” are incomplete. Pretraining returns are diminishing, but test-time compute scaling and reinforcement-driven reasoning have reopened performance curves. Models now trade speed for cognition—an entirely new axis of improvement.

Capital signals reinforce this uncertainty. Investment remains enormous despite efficiency concerns. Expert timelines diverge sharply, ranging from near-term transformation to decade-plus horizons requiring architectural breakthroughs.

Net assessment: Technical ceilings exist—but with the exception of emotional labor and accountability, their permanence is unproven. They slow substitution; they do not yet block it.

II. Authenticity demand: real preference, limited absorption

Even if AI capability advances, labor could be preserved through human preference. Consumers consistently express discomfort with AI in healthcare, customer service, and creative work. AI-labeled art sells at steep discounts. Handmade goods command premiums.

This is not anecdotal—it is robust across surveys, experiments, and markets.

But preference alone does not determine labor outcomes. Scale and wage structure do.

Authenticity premiums are real…

Healthcare shows persistent aversion to AI involvement—even among knowledgeable users. Customer service surveys repeatedly show strong preference for human agents. Creative markets demonstrate measurable price penalties for AI attribution.

These signals are stable across domains and demographics—with one exception.

…but generational erosion is a structural risk

Trust in AI differs sharply by cohort. Younger generations show far higher acceptance and adaptability. Exposure effects reduce skepticism further. The most authenticity-sensitive cohort is aging out of peak consumption years.

This does not eliminate authenticity demand—but it caps its durability as a system-wide labor buffer.

The binding constraint is wages, not demand

Human-intensive sectors employ millions—but at low pay.

Care work, the largest authenticity-protected domain, pays less than a living wage. Nearly half of care workers rely on public assistance. Comparable entry-level knowledge work pays significantly more.

Even optimistic estimates suggest authenticity-protected roles could absorb 8–25% of displaced workers—and at lower wages. This produces a hollowed middle, not preservation of labor share.

Authenticity sustains employment, not economic parity.

III. Falsification thresholds: what would overturn the thesis

The post-labor thesis would be falsified by clear evidence along either pathway.

Technical falsification by 2030 would require:

- Persistent stagnation on reasoning benchmarks despite architectural shifts

- Long-horizon task success remaining below 20%

- Physical manipulation failing to generalize beyond controlled environments

- AI deployment confined to narrow task sets after sustained investment

Authenticity falsification by 2030 would require:

- Durable >20% price premiums across multiple sectors

- Care wages exceeding $20/hour (real terms)

- Significant unionization or bargaining power in authenticity sectors

- Authenticity-protected employment expanding to >15% of total employment

None of these conditions are currently met.
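For readers who want to track these signals, the authenticity thresholds above can be written as an explicit check. This is a minimal sketch, not a forecasting model. It assumes the listed conditions must all hold jointly, which is one reading of "would require", and it treats "multiple sectors" as two or more. The class name, field names, and sample values are hypothetical and are not drawn from any dataset.

```python
from dataclasses import dataclass


@dataclass
class AuthenticitySignals:
    """Hypothetical 2030 observations; all field names are illustrative."""
    price_premium: float        # durable human-made price premium, as a fraction (0.25 = 25%)
    sectors_with_premium: int   # number of sectors where that premium holds
    care_wage_real: float       # care-sector hourly wage in real dollars
    bargaining_power: bool      # analyst's qualitative judgment on unionization/bargaining
    employment_share: float     # authenticity-protected share of total employment


def authenticity_falsified(s: AuthenticitySignals) -> bool:
    # Assumption: every listed condition must be met at once.
    return (
        s.price_premium > 0.20
        and s.sectors_with_premium >= 2   # assumed reading of "multiple sectors"
        and s.care_wage_real > 20.0
        and s.bargaining_power
        and s.employment_share > 0.15
    )


# Made-up inputs, for illustration only.
all_met = AuthenticitySignals(0.25, 3, 22.0, True, 0.18)
wage_short = AuthenticitySignals(0.25, 3, 17.0, True, 0.18)
```

With these made-up inputs, `authenticity_falsified(all_met)` returns `True` and `authenticity_falsified(wage_short)` returns `False`, since a single unmet condition is enough to leave the thesis standing under the joint reading.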

Conclusion: constraint without rescue

Technical ceilings slow automation, but do not yet stop it. Authenticity demand preserves human work, but not at scale or wage levels sufficient to stabilize labor’s share of income.

The most plausible outcome under current evidence is not a post-work world, nor a preserved labor economy—but bifurcation: high-wage human accountability roles alongside low-wage service and care work, with a shrinking middle.

This does not falsify the post-labor thesis. It refines it.

The decisive evidence will arrive not through speculation, but through measurable signals over the next five years: benchmark trajectories, wage transmission, generational preference shifts, and care-sector compensation. By 2030, we will know whether human labor is structurally preserved—or merely slowed on its way to marginalization.

The burden remains with the data.
